Editors’ Note: This is a revised version of a lecture given at the Adam Smith Tercentenary celebrations at the University of Glasgow on June 8, 2023. Several of the arguments are developed further in the author’s forthcoming book, Economics in America: An Immigrant Economist Explores the Land of Inequality.

One of the many pleasures of being a Scottish economist is being able to acknowledge Adam Smith as a predecessor. Smith was one of Scotland’s greatest thinkers in both economics and philosophy. I grew up in Edinburgh and was brought up to recognize Scots’ many achievements but was never told about Smith. Many years later, when I was inducted into the Royal Society of Edinburgh, Smith was mentioned, but only as a conveniently placed friend of the Duke of Buccleuch who helped the Society obtain its Royal Charter—that is, as a sort of well-connected lobbyist. Smith and David Hume were internationally renowned in their lifetime; the mathematician Michael Atiyah notes that their fame induced Benjamin Franklin in 1771 “to undertake the lengthy and tiresome journey by stagecoach to a small, cold, city on the northern fringes of civilization,” but Smith’s fame seems to have waned with time, at least at home.

Smith was not only a great thinker but also a great writer. He was an empirical economist whose sketchy data were more often right than wrong; he was skeptical, especially about wealth; and he was a balanced and humane thinker who cared about justice, noting how much more important it was than beneficence. But the story I want to tell is about economic failure and about economics failure, and about how Smith’s insights and humanity need to be brought back into the mainstream of economics. Much of the evidence that I use draws on my work with Anne Case as well as her work with Lucy Kraftman on Scotland in relation to the rest of the UK.

In her recent book Adam Smith’s America (2022), political scientist Glory Liu reports that in 1976, at an event celebrating the bicentenary of the publication of Smith’s The Wealth of Nations, George Stigler, the eminent Chicago economist, said, “I bring you greetings from Adam Smith, who is alive and well and living in Chicago!” Stigler might also have noted that the U.S. economy was flourishing too, as it had been for three decades, and might have been happy to connect the flourishing of Smith and the flourishing of the economy.

At an address to the American Philosophical Society earlier this year, the economist Benjamin Friedman updated Stigler’s quip, claiming that Smith is alive, but not so well, and is being held prisoner in Chicago. To be fair, the economics department in Chicago has changed greatly since 1976, so that the “is being held prisoner” should be more accurately put as “was being held prisoner.” And it was in this earlier period that much of the damage was done. In further contrast to 1976, the U.S. economy has not done so well over the last three decades, at least not for the less-educated majority: the sixty percent of the adult population that currently lacks a four-year college degree. I will argue that the distorted transformation of Smith into Chicago economics, especially libertarian Chicago economics, as well as its partial but widespread acceptance by the profession, bears some responsibility for recent failures.

This failure and distress can be traced in a wide range of material and health outcomes that matter in people’s lives. Here I focus on one: mortality. The United States has seen an epidemic of what Case and I have called “deaths of despair”—deaths from suicide, drug overdose, and alcoholic liver disease. These deaths, all of which are to a great extent self-inflicted, are seen in a few other rich countries, but in none, except Scotland, do we see anything like the scale of the tragedy that is being experienced in America. Elevated adult mortality rates are often a measure of societal failure, especially so when those deaths come not from an infectious disease, like COVID-19, or from a failing health system, but from personal affliction. As Emile Durkheim argued long ago, suicide, which is the archetypal self-inflicted destruction, is more likely to happen during times of intolerable social change when people have lost the relationships with others and the social framework that they need to support their flourishing.

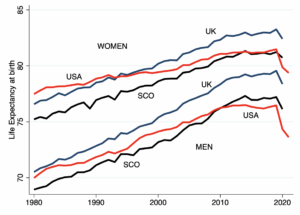

Figure 1: Life expectancy at birth in the United States, Scotland, and UK, by gender (Source: Human Mortality Database)

Drug deaths account for more than half of all deaths of despair in the United States and for an even larger share in Scotland. Figure 1 shows trends in life expectancy for men and women in the United States, in the UK, and in Scotland from 1980 to 2019. Upward trends are good and dominate the picture. The increases are more rapid for men than for women: women are less likely to die of heart attacks than are men, and so have benefited less from the decline in heart disease that had long been a leading cause of falling mortality. Scotland does worse than the UK, and is more like the United States, though Scots women do worse than American women; a history of heavy smoking does much to explain these Scottish outcomes. If we focus on the years just before the pandemic, we see a slowdown in progress in all three countries; this is happening in several other rich countries, though not all. We see signs of falling life expectancy even before the pandemic. (I do not discuss the pandemic here because it raises issues beyond my main argument.) Rich countries have long been used to life expectancy rising, not falling.

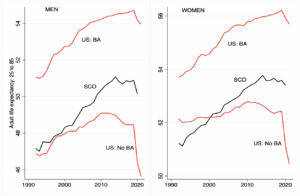

Figure 2: Adult life expectancy in Scotland and the United States (Source: U.S. Vital Statistics and Scottish life tables)

Figure 2 shows life expectancy at twenty-five and, for the United States, divides the data into those with and without a four-year college degree. In Scotland, we do not (yet) have the data to make the split. The remarkable thing here is that, in the United States, those without a B.A. have experienced falling (adult) life expectancy since 2010, while those with the degree have continued to see improvements. Adult mortality rates are going in opposite directions for the more and less educated. The gap, which was about 2.5 years in 1992, doubled to 5 years in 2019 and reached 7 years in 2021. Whatever plague is afflicting the United States, a bachelor’s degree is an effective antidote. The Scots look more like the less-educated Americans, though they do a little better, but note again that we cannot split the Scottish data by educational attainment.

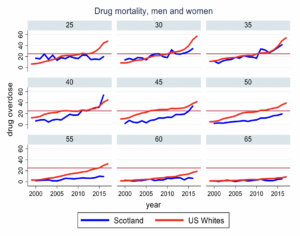

Figure 3: Drug overdose deaths, by age group, in the United States and Scotland (Source: U.S. Vital Statistics and WHO mortality database)

Figure 3 presents trends in overdose deaths by age group. U.S. whites are shown in red, and Scots in blue. Scotland is not as bad as the United States, but it is close, especially in the midlife age groups. No other country in the rich world looks like this.

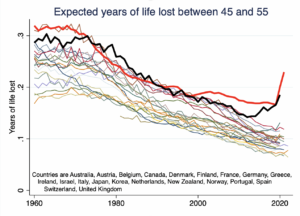

Figure 4: Expected years of life lost between 45 and 55 (Source: Human Mortality Database)

Finally, Figure 4 looks at mortality in midlife. Here, I return to all-cause mortality and show the number of years people can expect to lose between forty-five and fifty-five if each year’s mortality rates were to hold over the ten-year span. In addition to the United States and Scotland, the lighter lines show years of life lost in midlife for eighteen other rich countries. Once again, Scotland and the United States are competing for the wooden spoon. In the most recent years, they have both moved even further away from the other countries.
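
To make the measure in Figure 4 concrete, here is a minimal sketch of one standard way to turn a single year's mortality rates into expected years lost over a fixed age window. The function name, the assumption that people who die in a given year live half of it on average, and the input values are all illustrative assumptions; this is not the method or the data behind the figure.

```python
def years_lost_in_midlife(death_probs, start_age=45, end_age=55):
    """Expected years of life lost between start_age and end_age.

    death_probs[k] is the (hypothetical) probability that a person alive
    at age start_age + k dies before reaching age start_age + k + 1.
    The same set of rates is assumed to hold across the whole window.
    """
    span = end_age - start_age
    if len(death_probs) != span:
        raise ValueError("need one death probability per year of age in the window")

    alive = 1.0        # share of the starting cohort still alive
    years_lived = 0.0  # expected years lived inside the window
    for q in death_probs:
        if not 0.0 <= q <= 1.0:
            raise ValueError("death probabilities must lie between 0 and 1")
        # Survivors live the full year; those who die are assumed
        # to live half of it on average.
        years_lived += alive * (1.0 - q / 2.0)
        alive *= 1.0 - q

    return span - years_lived


# Made-up annual death probabilities for ages 45 to 54 (not real data).
illustrative_probs = [0.004] * 10
print(round(years_lost_in_midlife(illustrative_probs), 3))
```

With no deaths the function returns zero, and the figure it reports rises as the yearly rates rise, which is what lets countries be ranked on a single midlife number.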

I am now going to focus on the United States but will say a few words about Scotland later.

The reversal of mortality decline in the United States has many proximate causes; one of the most important has been the slowing—and for the less-educated an actual reversal—of the previously long-established decline in mortality from heart disease. But opioids and opioid manufacturers were an important part of the story.

In 1995 the painkiller OxyContin, manufactured by Purdue Pharma, a private company owned by the Sackler family, was approved by the Food and Drug Administration (FDA). OxyContin is an opioid; think of it as a half-strength dose of heroin in pill form with an FDA label of approval—effective for pain relief, and highly addictive. Traditionally, doctors in the United States did not prescribe opiates, even for terminally ill cancer patients—unlike in Britain—but they were persuaded by relentless marketing campaigns and a good deal of misdirection that OxyContin was safe for chronic pain. Chronic pain had been on the rise in the United States for some time, and Purdue and their distributors targeted communities where pain was prevalent: a typical example is a company coal town in West Virginia where the company and the coal had recently vanished. Overdose deaths began to rise soon afterwards. By 2012 enough opioid prescriptions were being written for every American adult to have a month’s supply. In time, physicians began to realize what they had done and cut back on prescriptions. Or at least most did; a few turned themselves into drug dealers and operated pill mills, selling pills for money or, in some cases, for sex. Many of those doctors are now in jail. (Barbara Kingsolver’s recent Demon Copperhead, set in southwest Virginia, is a fictionalized account of the social devastation, especially among children and young people.)

In 2010 Purdue reformulated OxyContin to make it harder to abuse, and around the same time doctors pulled back. But by then a large population of people had become addicted to the drugs, and when prescribers denied them pills, black market suppliers flooded the illicit market with cheap heroin and fentanyl, which is more than thirty times stronger than heroin. Sometimes dealers even met disappointed patients outside pain clinics. The epidemic of addiction and death that had been sparked by pharma companies in search of profit was enabled by some members of Congress, who, as Case and I describe in detail in our book, changed the law to make life easier for distributors and shut down investigations by the Drug Enforcement Administration. None of these congressional representatives was punished by voters.

Purdue wasn’t alone. Johnson & Johnson, maker of Band-Aids and baby powder, farmed opium poppies in Tasmania to feed demand at the same time, ironically, that the U.S. military was bombing opium fields in Afghanistan—perhaps an early attempt at friend-shoring. Distribution companies poured millions of pills into small towns. But once the spark ignited the fire, the fuel to maintain it switched from legal prescription drugs to illegal street drugs. Of the 107,000 overdose deaths in the United States in 2021, more than 70,000 involved synthetic opioids other than methadone—primarily fentanyl.

The Scots can perhaps congratulate themselves that Scottish doctors are not like American doctors. Scotland may have an epidemic of drug deaths, but at least it was not sparked by the greed of doctors and pharma, and not enabled by members of the Scottish parliament. Still, the Scots have their own dark episodes. Let’s go back to one of Smith’s most disliked institutions, the East India Company, and two Scotsmen, James Matheson and William Jardine, both graduates of Edinburgh University, one of whom (Jardine) was a physician. In 1817 Jardine left the East India Company and partnered with Matheson and Jamsetjee Jeejeebhoy, a Parsi merchant in Bombay in Western India, to pursue the export of opium to China—which, by that date, had become the main source of Company profits. China, whose empire was in a state of some decay, tried to stop the importation of opium and, on the orders of the Daoguang Emperor, Viceroy Lin Zexu—who today is represented by a statue in Chinatown in New York with the inscription “Pioneer in the War against Drugs”—destroyed several years’ supply of the drug in Humen, near Canton, today’s Guangzhou. In China today, Lin is a national hero.

In 1839 Jardine appealed to the British Government to punish Lin’s act and to seek reparations for the loss, even though opium was illegal in China. This would be akin to Mexico demanding reparations from the United States for confiscating a drug shipment by a cartel. After Parliament narrowly approved—many MPs saw the drug trade for the crime that it was—Foreign Secretary Palmerston sent in the gunboats to defend the profits of the drug cartel, with effects that can be felt to this day. In contemporary Chinese accounts, this was the beginning of the century of humiliation that only came to an end with the establishment of the People’s Republic of China in 1949. The Opium War still stands in China as a symbol of the evils of capitalism and the perfidy of the West.

Jardine became a Member of Parliament and was succeeded after his early death by Matheson, who went on to be a Fellow of the Royal Society and, eventually, owner of the Isle of Lewis, where he found a new line in exports: helping Scots living on his estates to emigrate to Canada during the Highland Potato Famine. By all accounts, Lord Matheson, as he had by then become, was a good deal more compassionate to his tenants than to his Chinese customers. Jardine Matheson is to this day a large and successful company. Jamsetjee Jeejeebhoy used his wealth from the opium trade to become a philanthropist, endowing hospitals in India, many of which still stand. For this work he was first knighted and then, in 1857, given a baronetcy by Queen Victoria. In a perhaps unsurprising echo, Queen Victoria’s great-great-granddaughter, Queen Elizabeth, awarded knighthoods in the 1990s to Raymond and Mortimer Sackler, owners of Purdue Pharma, not for the human destruction they had wrought in the United States but for their philanthropy in Britain, much of which involved what was later called “art-washing.” Many institutions are still trying to extricate themselves from Sackler money, including, most recently, Oxford University in the UK and the U.S. National Academies of Sciences, Engineering, and Medicine, which produced an “authoritative” report that, by several accounts, exaggerated the extent of pain in the United States, and thus the need for OxyContin.

The Sacklers, Jardine, Matheson, and Jeejeebhoy were all businessmen pursuing their own self-interest, just like Smith’s famous butcher, brewer, and baker, so why did the dealers do so much harm while, in Smith’s account, his tradesmen were serving the general well-being? Perhaps they were simply criminals who used their ill-gotten gains to corrupt the state—just what Smith had in mind when he described the laws that merchants clamored for as “like the laws of Draco,” in that they were “written in blood.” And misbehavior of the East India Company would have been no surprise to Smith. That is certainly part of the story, but I want to follow a different line of argument.

Smith’s notion of the “invisible hand,” the idea that self-interest and competition will often work to the general good, is what economists today call the first welfare theorem. Exactly what this general good is and exactly how it gets promoted have been central topics in economics ever since. The work of Gerard Debreu and Kenneth Arrow in the 1950s eventually provided a comprehensive analysis of Smith’s insight, including precise definitions of what sort of general good gets promoted, what, if any, are the limitations to that goodness, and what conditions must hold for the process to work.

I want to discuss two issues. First, there is the question of whose good we are talking about. The butcher, at least qua butcher, cares not at all about social justice; to her, money is money, and it doesn’t matter whose it is. The good that markets promote is the goodness of efficiency—the elimination of waste, in the sense that it is impossible to make anyone better off without hurting at least one other person. Certainly that is a good thing, but it is not the same thing as the goodness of justice. The theorem says nothing about poverty or about the distribution of income. It is possible that the poor gain through markets—possibly by more than the rich, as was argued by Mises, Hayek, and others—but that is a different matter, requiring separate theoretical or empirical demonstration.
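
For readers who prefer symbols, the efficiency notion described here is usually called Pareto efficiency. The notation below is the standard textbook one and is an assumption of this sketch, since none of it appears in the lecture: $F$ is the set of feasible allocations, $x$ and $y$ are allocations giving person $i$ the bundles $x_i$ and $y_i$, and $u_i$ is person $i$'s utility.

```latex
x \in F \text{ is efficient}
\iff
\nexists\, y \in F \ \text{such that}\
u_i(y_i) \ge u_i(x_i) \ \text{for all } i
\ \text{and}\
u_j(y_j) > u_j(x_j) \ \text{for some } j
```

Nothing in this condition refers to who holds what: an allocation in which one person has almost everything can satisfy it, which is why efficiency and justice are separate questions.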

The second issue is good information: that people know about the meat, beer, and bread that they are buying, and that they understand what will happen when they consume it. Arrow understood that information is always imperfect, but that the imperfection is more of a problem in some markets than others: not so much in meat, beer, and bread, for example, but a crippling problem in the provision of health care. Patients must rely on physicians to tell them what they need in a way that is not true of the butcher, who does not expect to be obeyed when she tells you that, just to be sure you have enough, you should take home the carcass hanging in her shop. In the light of this fact, Arrow concluded that private markets should not be used to provide health care. “It is the general social consensus, clearly, that the laissez-faire solution for medicine is intolerable,” he wrote. This is at least one of the reasons why almost no wealthy country relies on pure laissez-faire to provide health care.

So why is America different? And what does America’s failure to heed Arrow have to do with the Sacklers, or with deaths of despair, or with OxyContin and the pain and despair that it exploited and created? There are many reasons why America’s health care system is not like the Canadian or European systems, including, perhaps most importantly, the legacy of racial injustice. But today I want to focus on economics.

Neither America nor American economics was always committed to laissez-faire. In 1886, in his draft of the founding principles of the American Economic Association, Richard Ely wrote that “the doctrine of laissez-faire is unsafe in politics and unsound in morals.” The Association’s subscribers knew something about morals; in his 2021 book Religion and the Rise of Capitalism, Ben Friedman notes that 23 of its original 181 members were Protestant clergymen. The shift began with Chicago economics, and the strange transformation of Smith’s economics into Chicago economics (which, as the story of Stigler’s quip attests, was branded as Smithian economics). Liu’s book is a splendid history of how this happened.

As Angus Burgin has finely documented in The Great Persuasion: Reinventing Free Markets since the Depression (2015), Chicago economists did not argue that Arrow’s theorems were wrong, nor that markets could not fail—just that any attempt to address market failures would only make things worse. Worse still was the potential loss of freedom that, from a libertarian perspective, would follow from attempts to interfere with free markets.

Chicago economics is important. Many of us were brought up on a naïve economics in which market failures could be fixed by government action; indeed, that was one of government’s main functions. Chicago economists correctly argued that governments could fail, too. Stigler himself argued that regulation was often undone because regulators were captured by those they were supposed to be regulating. Monopolies, according to Milton Friedman, were usually temporary and were more likely to be competed away than reformed; markets were more likely to correct themselves than to be corrected by government regulators. James Buchanan argued that politicians, like consumers and producers, had interests of their own, so that the government cannot be assumed to act in the public interest and often does not. Friedman argued in favor of tax shelters because they put brakes on government expenditures. Inequality was not a problem in need of a solution. In fact, he argued, most inequality was just: the thrifty got rich and spendthrifts got poor, so redistribution through taxation penalized virtue and subsidized the spendthrift. Friedman believed that attempts to limit inequality of outcomes would stifle freedom, that “equality comes sharply into conflict with freedom; one must choose,” but, in the end, choosing equality would result in less of it. Free markets, on the other hand, would produce both freedom and equality. These ideas were often grounded more in hope than in reality, and history abounds with examples of the opposite: indeed, the early conflict between Jefferson and Hamilton concerned the former’s horror over speculation by unregulated bankers.

Gary Becker extended the range of Chicago economics beyond its traditional subject matter. He applied the standard apparatus of consumer choice to topics in health, sociology, law, and political science. On addiction, he argued that people dabbling in drugs recognize the dangers and will take them into account. If someone uses fentanyl, they know that subsequent use will generate less pleasure than earlier use, that in the end, it might be impossible to stop, and that their life might end in a hell of addiction. They know all this, but are rational, and so will only use if the net benefit is positive. There is no need to regulate, Becker believed: trying to stop drug use will only cause unnecessary harm and hurt those who are rationally consuming them.

Chicago analysis serves as an important corrective to the naïve view that the business of government is to correct market failures. But it all went too far, and morphed into a belief that government was entirely incapable of helping its citizens. In a recent podcast, economist Jim Heckman recounts that when he was a young economist at Chicago, he wrote about the effects of civil rights laws in the 1960s on the wages of Black people in South Carolina. The resulting paper, published in 1989 in the American Economic Review, is the one of which Heckman is most proud. But his colleagues were appalled. Stigler and D. Gale Johnson, then chair of the economics department, simply would not have it. “Do you really believe that the government did good?” they asked him. This was not a topic that could possibly be subject to empirical inquiry; it had been established that the government could do nothing to help.

If the government could do nothing for African Americans, then it certainly could do nothing to improve the delivery of health care. Price controls were anathema because they would only undermine the provision of new lifesaving drugs and devices and would artificially limit provision, and this was true no matter what prices pharma demanded. If the share of national income devoted to health care were to expand inexorably, then that must be what consumers want, because markets work and health care is a commodity like any other. By the time of the pandemic, U.S. health care was absorbing almost a fifth of GDP, more than four times as much as military expenditure, and about three times as much as education. And just in case one might think that health care has anything to do with life expectancy, the OECD currently lists U.S. life expectancy as thirty-fourth out of the forty-nine countries that it tabulates. (That is lower than the figures for China and Costa Rica.)

I do not want to make the error of drawing a straight line from a body of thought to actual policy. In his General Theory (1936), Keynes famously wrote that “Practical men, who believe themselves to be quite exempt from any intellectual influences, are usually the slaves of some defunct economist. Madmen in authority, who hear voices in the air, are distilling their frenzy from some academic scribbler of a few years back.” He added, “I am sure that the power of vested interests is vastly exaggerated compared with the gradual encroachment of ideas.” Keynes was wrong about the power of vested interests, at least in the United States, but he was surely right about the academic scribblers, though the effect works slowly and usually indirectly. Hayek understood this very well, writing in his Constitution of Liberty (1960) that the direct influence of a philosopher on current affairs may be negligible, but “when his ideas have become common property, through the work of historians and publicists, teachers and writers, and intellectuals generally, they effectively guide developments.”

Friedman was an astonishingly effective rhetorician, perhaps only ever equaled among economists by Keynes. Politicians today, especially on the right, constantly extol the power of markets. “Americans have choices,” declared former Utah congressman Jason Chaffetz in 2017. “Perhaps, instead of getting that new iPhone that they just love, maybe they should invest in their own health care. They’ve got to make those decisions themselves.” Texas Republican Jeb Hensarling, who chaired the House Financial Services Committee from 2013 to 2019, became a politician to “further the cause of the free market” because “free-market economics provided the maximum good to the maximum number.” Hensarling studied economics with onetime professor and later U.S. Senator Phil Gramm, another passionate and effective advocate for markets. My guess is that most Americans, and even many economists, falsely believe that using prices to correct an imbalance between supply and demand is not just good policy but is guaranteed to make everyone better off. The belief in markets, and the lack of concern about distribution, runs very deep.

And there are worse defunct scribblers than Friedman. Republican ex-speaker Paul Ryan and ex-Federal Reserve chair Alan Greenspan are devotees of Ayn Rand, who despised altruism and celebrated greed, and who believed, as was carefully not claimed by Hayek and other more serious philosophers, that the rich deserved their wealth and the poor deserved their misery. Andrew Koppelman, in his 2022 study of libertarianism, Burning Down the House, has argued that Rand’s influence has been much larger than commonly recognized. Her message that markets are not only efficient and productive but also ethically justified is a terrible poison—which does not prevent this message from being widely believed.

The Chicago School’s libertarian message of non-regulation was catnip to rich businessmen who enthusiastically funded its propagation. They could oppose taxation in the name of freedom: the perfect cover for crony capitalists, rent seekers, polluters, and climate deniers. Government-subsidized health care as well as public transport and infrastructure were all attacks on liberty. Successful entrepreneurs founded pro-market think tanks whose conferences and writings amplified the ideas. Schools for judges were (and are) held in luxury resorts to help educate the bench in economic thinking; the schools had no overt political bias, and several distinguished economists taught in them. The (likely correct) belief of the sponsors is that understanding markets will make judges more sympathetic to business interests and will purge any “unprofessionalism” about fairness. Judge Richard Posner, another important figure in Chicago economics, believed that efficiency was just, automatically so—an idea that has spread among the American judiciary. In 1959 Stigler wrote that “the professional study of economics makes one politically conservative.” He seems to have been right, at least in America.

There is no area of the economy that has been more seriously damaged by libertarian beliefs than health care. While the government provides health care to the elderly and the poor, and while Obamacare provides subsidies to help pay for insurance, those policies were enacted by buying off the industry and by giving up any chance of price control. In Britain, when Nye Bevan negotiated the establishment of the National Health Service in 1948, he dealt with providers by “[stuffing] their mouths with gold”—but just once. Americans, on the other hand, pay the ransom year upon year. Arrow had lost the battle against market provision, and the intolerable became the reality. For many Americans, reality became intolerable.

Prices of medical goods and services are often twice or more the prices in other countries, and the system makes heavy use of procedures that are better at improving profits than improving health. It is supported by an army of lobbyists—about five for every member of Congress, three of them representing pharma alone. Its main regulator is the FDA, and while I do not believe that the FDA has been captured, the industry and the FDA have a cozy relationship that does nothing to rein in profits. Pharma companies not only charge more in the United States, but, like tech companies, they transfer their patents and profits to low-tax jurisdictions. I doubt that Smith would argue that the high cost of drugs in the United States, like the cost of apothecaries in his own time, could be attributed to the delicate nature of their work, the trust in which they are held, or their being the sole physicians to the poor.

When a fifth of GDP is spent on health care, much else is forgone. Even before the pandemic ballooned expenditures, the threat was clear. In his 2013 book on the 2008 financial crisis, After the Music Stopped, Alan Blinder wrote, “If we can somehow solve the health care cost problem, we will also solve the long-run deficit problem. But if we can’t control health care costs, the long run budget problem is insoluble.” All of this has dire effects on politics, not just on the economy. Case and I have argued that while out-of-control health care costs are hurting us all, they are wrecking the low-skill labor market and exacerbating the disruptions that are coming from globalization, automation, and deindustrialization. Most working-age Americans get health insurance through their employers. The premiums are much the same for low-paid as for high-paid workers, and so are a much larger share of the wage costs for less-educated workers. Firms have large incentives to get rid of unskilled employees, replacing them with outsourced labor, domestic or global, or with robots. Few large corporations now offer good jobs for less-skilled workers. We see this labor market disaster as one of the most powerful of the forces amplifying deaths of despair among working-class Americans—certainly not the only one, but one of the most important.
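
The arithmetic behind the premium argument can be sketched in a few lines. The premium and wage figures below are hypothetical round numbers chosen only for illustration, not data from this lecture, and the function name is likewise an assumption.

```python
def premium_share_of_labor_cost(premium, wage):
    """Employer-paid premium as a fraction of total labor cost (wage plus premium)."""
    if premium < 0 or wage < 0 or premium + wage == 0:
        raise ValueError("premium and wage must be non-negative and not both zero")
    return premium / (wage + premium)


# Hypothetical figures: the same annual premium for both workers.
PREMIUM = 20_000
low_paid_share = premium_share_of_labor_cost(PREMIUM, wage=30_000)
high_paid_share = premium_share_of_labor_cost(PREMIUM, wage=150_000)

print(f"low-paid worker:  {low_paid_share:.0%} of labor cost")
print(f"high-paid worker: {high_paid_share:.0%} of labor cost")
```

Because the premium is roughly fixed per worker, it behaves like a head tax on employment: the lower the wage, the larger the share of the cost of hiring that goes to insurance rather than to pay.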

Scotland shows that you do not need an out-of-control health care sector to produce drug overdoses—that deindustrialization and community destruction are important here just as they are in the United States. But Scotland seems to have skipped the middle stages, going straight from deindustrialization and distress to an illegal drug epidemic. As with less-educated Americans, some Scots point to a failure of democracy: of people being ruled by politicians who are not like them, and whom they neither like nor voted for.

The inheritors of the Chicago tradition are alive and well and have brought familiar arguments to the thinking about deaths of despair. As has often been true of libertarian arguments, social problems are largely blamed on the actions of the state. Casey Mulligan, an economist on Trump’s Council of Economic Advisers, argued that by preventing people from drinking in bars and requiring them to drink cheaper store-bought alcohol at home, COVID-19 public health lockdowns were responsible for the explosion of mortality from alcoholic liver disease during the pandemic. Others have argued that drug overdoses were exacerbated by Medicaid drug subsidies, inducing a new kind of “dependence” on the government. In 2018 U.S. Senator Ron Johnson of Wisconsin issued a report, Drugs for Dollars: How Medicaid Helps Fuel the Opioid Epidemic. According to these accounts, the government is powerless to help its citizens—and not only is it capable of hurting them, but it regularly and inevitably does so.

I do not want to claim that health care is the only industry that we should worry about. Maha Rafi Atal is writing about Amazon and how, like the East India Company, it has arrogated to itself many of the powers of government, especially local government, and how, as Smith predicted, government by merchants (government in the aid of greed) is bad government. Health care is not a single entity, unlike Amazon, but like Amazon, its power in Washington is deeply troubling. There are serious anxieties over the behavior of other tech companies too. Banks did immense harm in the financial crisis. Today legalized addiction to gambling, to phones, to video games, and to social media has become a tool of corporate profit making, just as OxyContin was a tool of profit making for the Sacklers. After many years of stability, the share of profits in national income is rising. People who should be full citizens in a participatory democracy can often feel more like sheep waiting to be sheared.

Of course, there have always been mainstream economists who were not libertarians, perhaps even a majority: those who worked for Democratic administrations, for instance, and who did not subscribe to all Chicago doctrines. But there is no doubt that the belief in markets has become more widely accepted on the left as well as on the right. Indeed, it would be a mistake to lay blame on Chicago economics alone and to absolve the rest of an economics profession that was all too eager to adopt its ideas. Economists have become famous (or infamous) for their sometimes-comic focus on efficiency, and on the role of markets in promoting it. And they have come to think of well-being as individualistic, independent of the relationships with others that sustain us all. In 2006, after Friedman’s death, it was Larry Summers who wrote that “any honest Democrat will admit that we are all now Friedmanites.” He went on to praise Friedman’s achievements in persuading the nation to adopt an all-volunteer military and to recognize the benefits of “modern financial markets”—all this less than two years before the 2008 collapse of Lehman Brothers. The all-volunteer military is another bad policy whose consequences could end up being even worse. It lowers the costs of war to the decision-making elites whose children rarely serve, and it runs the risk of spreading pro-Trump populism by recruiting enlisted men and women from the areas and educational groups among which such support is already strong.

The beliefs in market efficiency and the idea that well-being can be measured in money have become second nature to much of the economics profession. Yet it does not have to be this way. Economists working in Britain—Amartya Sen, James Mirrlees, and Anthony Atkinson—pursued a broader program, worrying about poverty and inequality and considering health as a key component of well-being. Sen argues that a key misstep was made not by Friedman but by Hayek’s colleague Lionel Robbins, whose definition of economics as the study of allocating scarce resources among competing ends narrowed the subject compared with what philosopher Hilary Putnam calls the “reasoned and humane evaluation of social wellbeing that Adam Smith saw as essential to the task of the economist.” And it was not just Smith, but his successors, too, who were philosophers as well as economists.

Sen contrasts Robbins’s definition with that of Arthur Cecil Pigou, who wrote, “It is not wonder, but rather the social enthusiasm which revolts from the sordidness of mean streets and the joylessness of withered lives, that is the beginning of economic science.” Economics should be about understanding the reasons for, and doing away with, the world’s sordidness and joylessness. It should be about understanding the political, economic, and social failures behind deaths of despair. But that is not how it worked out in the United States.
