Executive Summary

This report presents original findings from the most comprehensive study of the accuracy of public perception with respect to the prevalence and racial distribution of police use of force.

The report is divided into four main parts:

- Part 1 outlines the study’s theoretical framework and expectations regarding the accuracy of public estimates of police use of force. In brief, it argues that because of a lack of direct personal experience and information for assessing the prevalence of police force and racial bias, Americans must rely on media coverage to estimate how serious police violence is. This renders public estimates susceptible to media distortion; and this perceptual distortion is further exacerbated by individuals’ political orientations and biases.

- Part 2 presents the first empirical analysis of new data from our original survey, which asks U.S. respondents to estimate various police use-of-force statistics. The findings reveal significant overestimates of the prevalence of nonlethal use-of-force incidents—both in general and as to those involving black Americans. Respondents also significantly overestimated both the black and unarmed shares of fatal police-shooting victims. These overestimates tend to be largest among liberal respondents.

- Part 3 introduces the study’s embedded experimental design, which tests whether correcting survey respondents’ misestimates leads respondents to adjust their perceptions of police brutality and related policy preferences. Encouragingly, the overall findings indicate that receiving correct information significantly reduced inaccurate perceptions of police brutality and racism and increased support for policing-centered, anticrime public policies. These effects were also greatest for liberals and respondents who gave moderate-to-large overestimates, on average.

- Part 4 discusses the implications of the study’s findings for news media coverage of police use of force. It proposes recommendations for journalists and argues for the aggressive and frequent use of social media context and fact-checking tools as a means of combating public misperceptions of policing.

Introduction

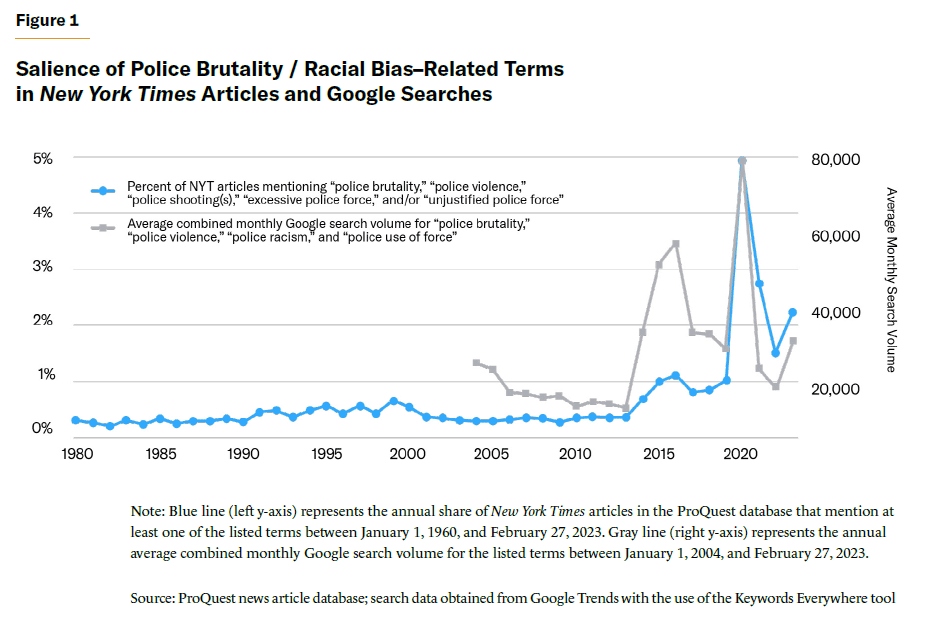

Recently, there has been a dramatic increase in media and public attention to police brutality and racial bias. By some measures, the volume of media references to these topics has been greater over the past decade than ever before.[1] Google search behavior shows that Americans are consuming this messaging (Figure 1), and their attitudes toward police—particularly Democrats’ and liberals’ attitudes—have responded accordingly.[2] Confidence in police has never been lower,[3] while antipolice sentiment,[4] perceptions of police brutality[5] and racism,[6] and support for defunding the police have never been higher.[7]

So much have perceptions of racist policing grown that, as of 2021, more than half (52%) of Democrats felt that levels of racism were greater among police officers than other societal groups (up from 35% in 2014). Fears of the police among black Americans[8] have increased to the point that, in 2020, roughly 74% of black respondents to a Quinnipiac University poll said that they “personally worry” about being the victim of police brutality, compared with 64% and 57% who said so in 2018 and 2016, respectively.

Yet these trends in media coverage and public perceptions seem divorced from empirical reality. A stark illustration of this was provided by a nationally representative survey conducted in 2019 by the Skeptic Research Center,[9] which found that nearly 33% of people—including 44% of liberals—thought that 1,000 or more unarmed black men alone were killed by police in 2019. In fact, according to the Mapping Police Violence (MPV) database, 29 unarmed black (vs. 44 white) men were killed by police that year.[10] Further, whereas the average Skeptic Research Center respondent thought that 48% of all people killed by police that year were black—an estimate that reached a high of 55% among liberal respondents—the true proportion (25%) was far lower.[11]

Meanwhile, an earlier Qualtrics survey conducted by Manhattan Institute fellow Eric Kaufmann found that 80% of black respondents and 60% of highly educated white liberal respondents thought that young black men were more likely to be fatally shot by police than to die in a car accident.[12] Actually, in 2020, the year the survey was fielded, black men between the ages of 18 and 34 were more than 17 times more likely to die in motor-vehicle accidents (38.4 deaths per 100,000) than to be shot to death by police (2.2 per 100,000).[13]
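The "more than 17 times" comparison follows directly from the two rates quoted above. A minimal Python check, using only the figures given in the text (the variable names are illustrative):

```python
# Death rates per 100,000 black men aged 18-34 in 2020, as quoted in the text.
motor_vehicle_rate = 38.4
police_shooting_rate = 2.2

# Ratio of the two rates: how many times as likely a motor-vehicle death is.
rate_ratio = motor_vehicle_rate / police_shooting_rate
print(f"Motor-vehicle deaths are about {rate_ratio:.1f} times as likely.")
```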

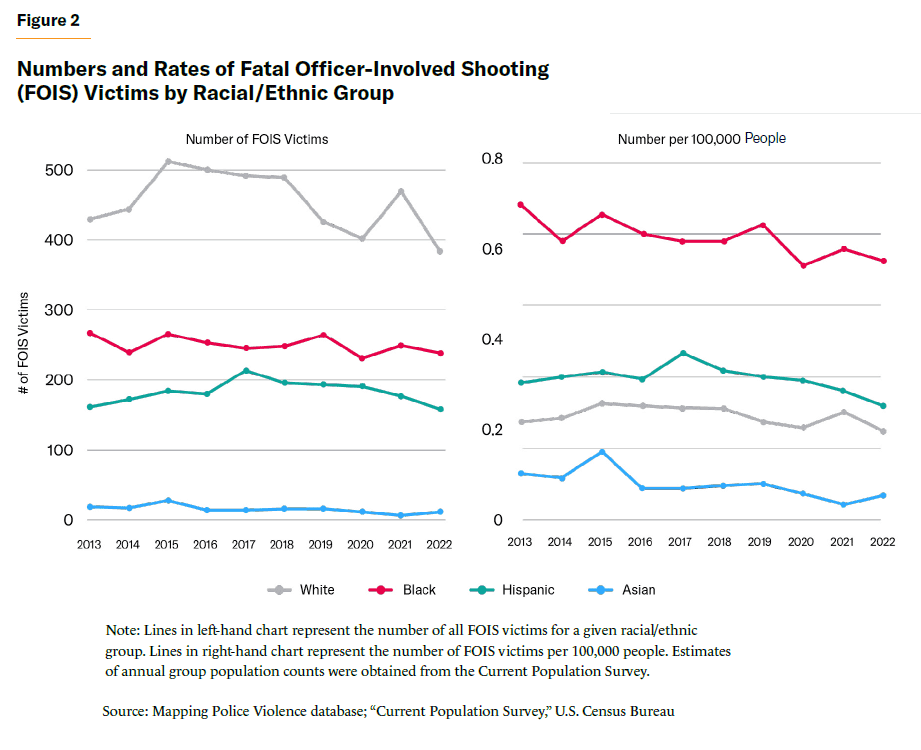

More generally, and despite 45% of the public (including 67% of Democrats) thinking that police violence against the public is an “extremely” or a “very serious” problem, instances of police use of force remain exceedingly rare.[14] What data can be gathered[15] show that rates of fatal officer-involved shootings (FOIS) in major U.S. cities are generally lower in the past 10 years than in decades past.

Data from the MPV database (Figure 2) further reveal that numbers and rates of FOIS among the country’s four largest racial/ethnic groups have either declined or remained stable across the 2013–22 period.

Rather than a response to actual increases in use of force, the swing of public opinion against the police appears to be a largely media-driven phenomenon—one apparently facilitated by the rapid adoption of smartphones and social media and the rise of the Black Lives Matter movement.

This report considers whether the unprecedented media attention given to police use of force has distorted public perceptions of its frequency and racial distribution, thereby making the phenomenon appear more pervasive and severe than is justified by the data.

It addresses three main questions:

- First, how accurate are people’s estimates of police use-of-force statistics? Findings from the aforementioned Skeptic survey, one of the few existing surveys to address this question, suggest that such estimates tend to be highly inaccurate, but this report provides a more thorough investigation into the matter.

- Second, do such (mis)estimates directly inform people’s assessment of the severity of police brutality and racism, as well as their policing policy preferences? While intuitively that may be the case, it’s also plausible that overestimates of police brutality and racism are the result of political or social identities, reflecting or reinforcing preexisting views rather than independently guiding perceptions and preferences.

- Third, do people adjust their perceptions of police brutality and associated policy preferences when their misestimates are corrected?

These questions are important because, inadvertently or otherwise, news organizations, journalists, and political elites may be contributing to misperceptions about police use of force—misperceptions that could have, and likely have had, significant social costs.[16]

For instance, negative media portrayals of police and the public outrage that those portrayals foster can cause police officers to engage in less proactive policing, which, in turn, can lead to increases in crime.[17] Negative media portrayals can also weaken the morale of police officers and decrease the desirability of the profession, making it harder for police departments to retain and recruit quality personnel and forcing them to lower hiring standards[18] to fill staffing vacancies.[19]

The erosion of the police’s public legitimacy can also sway or pressure local governments to consider or enact reckless de-policing policies[20] that compromise public safety—as was the case following the murder of George Floyd.[21] It could also motivate greater lawbreaking, as people are less likely to comply with the law if they perceive that the police routinely flout it.[22] In addition, misperceptions of police use of force can lead to unwarranted fears of being victimized by police, discouraging people from cooperating with the police or reporting crimes and suspicious activities.[23]

Insofar as these negative consequences are rooted in misperceptions of the prevalence and severity of police violence, this report brings good news. Since misperceptions can be corrected, this report suggests that at least some of these negative consequences could be mitigated, if not avoided, if news organizations and political leaders covered or discussed policing incidents more responsibly.

Part 1: Literature Review and Theoretical Expectations

The Role of News Media in Public (Mis)perceptions of “Police Violence”

People often lack direct experience with the social phenomena that they are asked to assess in public opinion surveys. This is likely especially the case with respect to police use of force, which few Americans will ever directly observe or encounter. Therefore, when asked to appraise the importance, risk, or severity of a given phenomenon, the responses that people give are typically based on whatever information is at the top of their minds at that moment—or what is most convenient for their social identities and political orientations.[24]

People rely on news media as a source of informational cues, and media coverage is often the only window available through which the public can learn about phenomena outside their direct experience.[25] It is also the primary channel through which political leaders communicate to the public, which further helps people determine or become aware of an issue’s relative importance.[26] Thus, the more attention that news media and political leaders give to an issue, the more the public is likely to regard it as important. Additionally, the more the media reports on a given phenomenon, the easier it is for people to retrieve examples of that phenomenon from memory. This easy retrieval, in turn, is a cue to the phenomenon’s broader societal prevalence.

In the context of race, the availability of informational cues may explain why Americans tend to perceive greater discrimination against blacks and worse race relations in the U.S. at large than in their own communities—i.e., their appraisals of local events are less reliant on news media and elite messaging and are more informed by what they see around them.[27] When it comes to appraising the prevalence and severity of racism and race relations more broadly, however, most people have little choice but to rely on news media and political leaders. Therefore, it’s likely no coincidence that as media and political attention to racial discrimination increases, so, too, do public perceptions of its severity.[28]

Expectations for Public Estimates of Police Use of Force

As with racial discrimination, increases in public perceptions of the seriousness and prevalence of police brutality closely track increases in media coverage of that issue. To the extent that these perceptions relate to or influence people’s estimates of the prevalence of police force against the public, this study expects that the average respondent will significantly overestimate:

- The percentage of Americans subjected to nonlethal force at the hands of the police in a typical year

- The number of Americans shot and killed by police in a typical year

- The share of unarmed Americans shot and killed by police in a typical year.

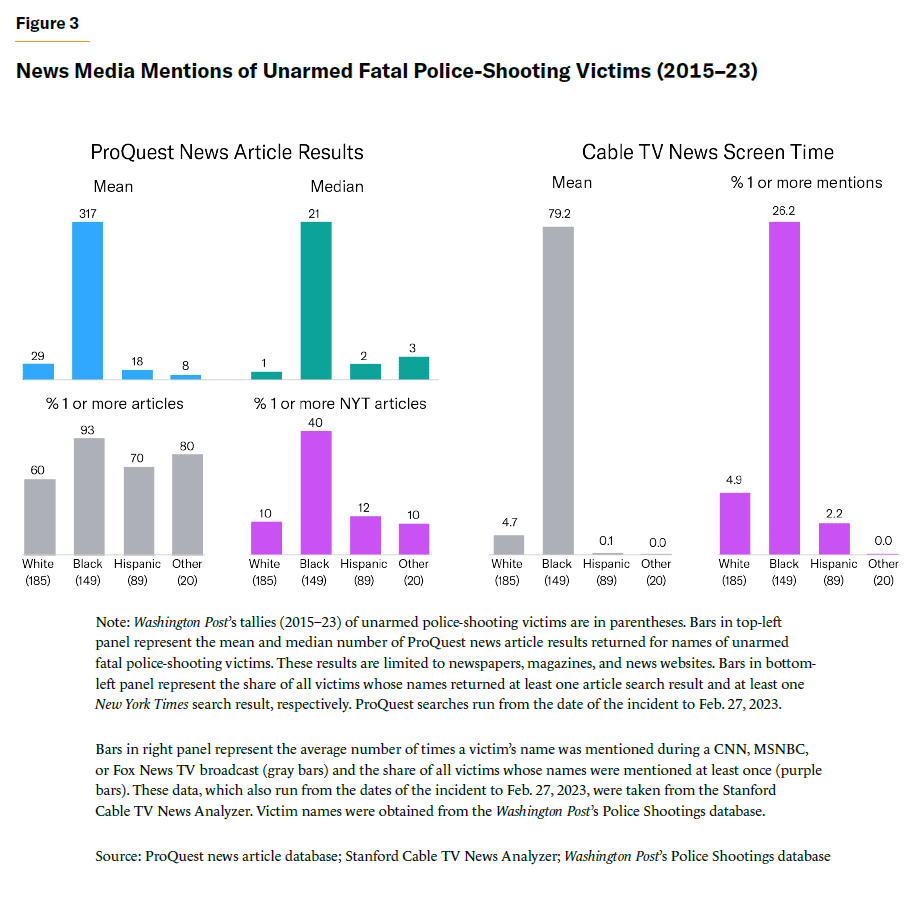

However, the media and political messaging on police brutality that the public receives are hardly race-neutral. Data from the ProQuest news article database (Figure 3) show that the average and median unarmed black (fatal) police-shooting victim returns nearly 11 and 21 times the number of news articles, respectively, as the average and median unarmed white (fatal) victim.

Black victims were also four times more likely than white victims to be featured in the New York Times. These disparities are not confined to printed news coverage. As shown in the right panel of Figure 3, black victims were more than five times more likely than white victims to be mentioned at least once during an MSNBC, CNN, or Fox News broadcast.

This indicates that media messaging on police brutality is overwhelmingly focused on black victims, despite blacks constituting just a quarter (if a disproportionate one) of those killed by the police in a typical year.[29] This likely explains why the average respondent in the Skeptic survey estimated that 48% of those killed by police in 2019 were black. When Americans are asked to provide such estimates, the cases that spring to mind likely consist largely, if not entirely, of black faces.

Accordingly, when respondents are additionally asked to give estimates for blacks and whites, this study predicts that:

- Respondents’ overestimation of the percentage of Americans who reported being subjected to nonlethal force in a typical year will be significantly larger when the Americans are identified as black than when identified as white

- They will significantly overestimate the black share of fatal police-shooting victims in a typical year and underestimate the white share

- Their overestimates of the unarmed share of fatal police-shooting victims will be significantly larger when the victims are identified as black than when they are identified as white.

Political Orientation’s Influence on Estimation Accuracy

So far, all the theoretical predictions in this study have been blind to the respondents’ political orientations. This is because people’s estimates should basically be shaped by the same information environment. In other words, liberals and conservatives are all expected to overestimate the criteria in question, on average.

However, it’s also reasonable to expect that the magnitude of overestimates will be larger among liberals than conservatives. Not only are perceptions of pervasive police brutality and racism more congenial[30] to the moral-ideological orientations of liberals than conservatives, but liberals are also likely to be more interested in and attentive to (or at least less avoidant of) such information.[31] Also, the political (or Democratic Party) leaders and elites whom liberals follow are more likely to communicate it.[32]

What little data are available also suggest that liberals do indeed have less accurate perceptions with respect to police use of force than conservatives. As noted earlier, the Skeptic survey found that liberals overestimated both the number of unarmed black men killed by police and the black share of police homicides in 2019 to a greater degree than conservatives.

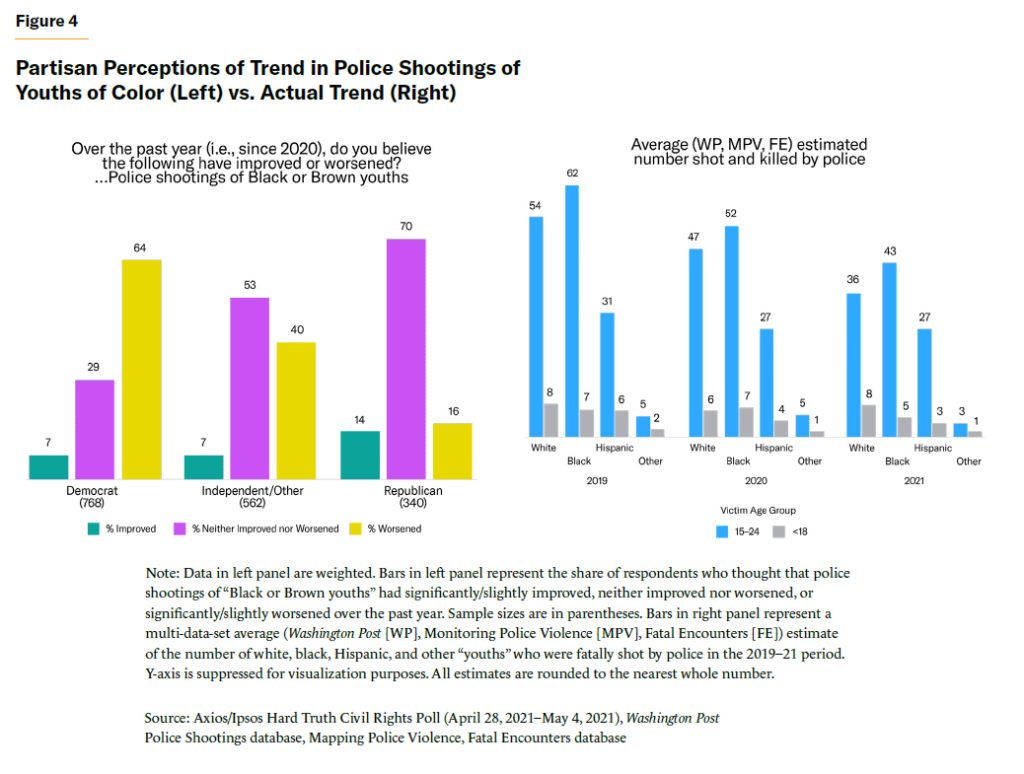

Additionally, while ideological identification was not measured, data from a 2021 Axios/Ipsos survey (Figure 4) show that Democrats were four times more likely than Republicans to believe that police shootings of “Black or Brown youths” had gotten worse over the previous year.[33]

In fact, and regardless of the data source or definition of “youth” adopted, there were no such increases in the number of FOIS victims among these demographics (Figure 4, right panel) in 2019–21. To the contrary, fatal police shootings in this demographic either declined or remained roughly steady.

This context informs this study’s expectation that liberals will give larger overestimates of all nonlethal and lethal use-of-force criteria—especially those for black victims—than conservatives. Further, while conservatives are expected to give estimates that are closer to the actual number, on average, we expect rates of underestimation to be higher among them than among Democrats and liberals.

Do Perceptions Respond to New, Correct Information?

When people are provided with facts or correct information about a social condition or phenomenon, do they update their perceptions accordingly? This is a critical question for the current study. If people cling to their perceptions of police brutality and racism despite being presented with evidence to the contrary, it would indicate that these perceptions are primarily driven by political or social motivations, as opposed to being a product of ignorance, media bias, and general information heuristics.

However, an emerging consensus from experimental research is that people do “typically update their beliefs in the direction of the evidence they receive.”[34] This has been evident in studies examining the accuracy of people’s perceptions of crime trends,[35] the degree of racial inequality and discrimination,[36] and the characteristics and economic contributions of immigrants to the United States.[37] Such findings suggest that while social and political motivations do bear on individuals’ beliefs, there is also an inherent motivation to hold accurate beliefs about the world, leading to a willingness to revise beliefs and perceptions when presented with sufficient evidence.[38]

Because this study expects all respondents to initially overestimate the prevalence of police use of force and the proportion of black American victims, I predict that respondents who are subsequently shown official estimates of these statistics (i.e., the treatment) will perceive police brutality and racism to be rarer and less of a problem than those who are not shown official estimates.

However, some respondents will inevitably have larger overestimates than others, some will be roughly accurate, and some will even underestimate. It would therefore be wrong to expect the treatment to have the same effect regardless of the size and direction of a respondent’s misestimates. Instead, the more a respondent overestimated the official use-of-force statistics, the more that respondent is expected, after treatment, to perceive less police brutality and racism relative to the untreated.

If individuals’ policing-related policy preferences are at least partly based on their perceptions of the prevalence and severity of police brutality and racism, it follows that respondents who receive corrective information (i.e., the “treatment” group) should update not only their perceptions but also their policy preferences.

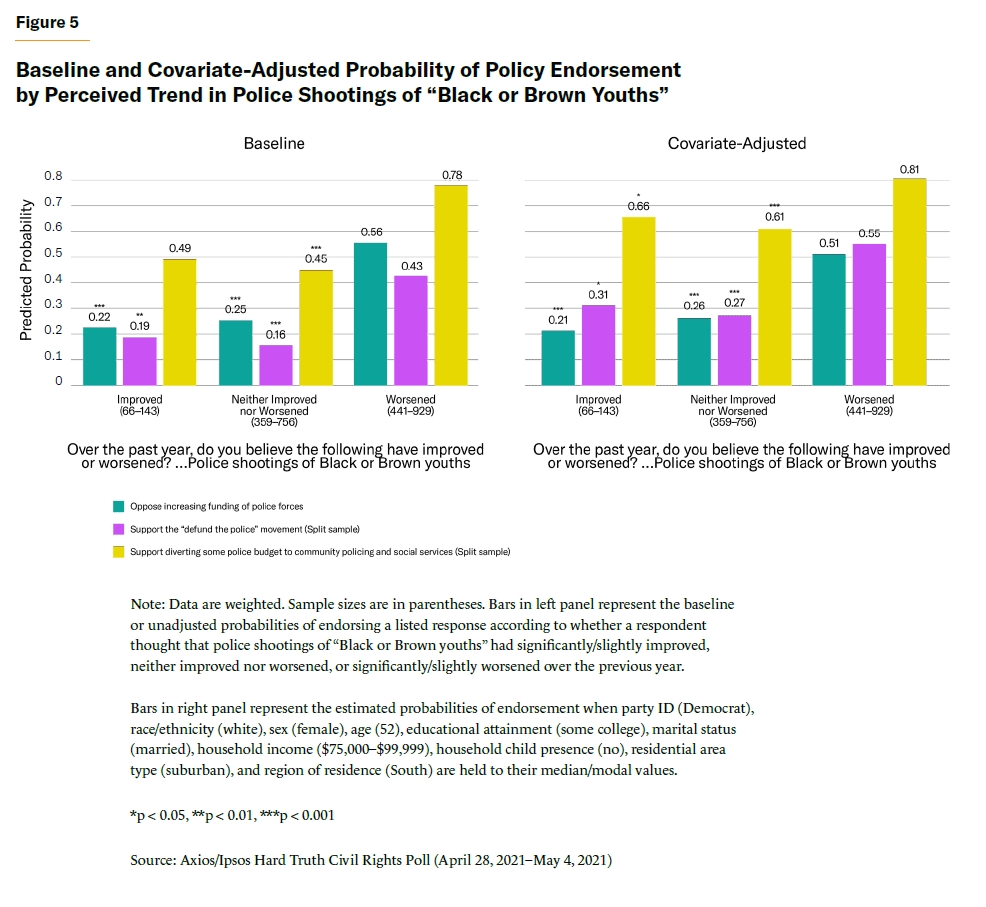

Political science studies that compare the policy preferences of “informed” or accurate respondents with the uninformed or inaccurate generally offer strong conceptual support for this assumption.[39] An example of this kind of analysis is shown in Figure 5, which compares the policing-related policy attitudes of those who thought that police shootings of “Black or Brown youths” had improved, neither improved nor worsened, or worsened over the previous year. Even when accounting for party identification, race, and other demographic/background variables, those who gave the (incorrect) response of “worsened” are 25–30 points more likely to oppose increasing police funding, 24–28 points more likely to support the “defund the police” movement, and 15–20 points more likely to support diverting police budgets to community policing and social services, compared with those who gave an “improved” or “neither improved nor worsened” response.

Of course, cross-sectional analyses of this sort hardly prove that policy preferences are causally downstream from perceptions. Both perceptions and preferences could stem from other variables whose influences are not captured in the model, such as ideology and political or social identity. Findings from experimental research are thus generally more probative. Esberg, Mummolo, and Westwood note that, with rare exceptions, such experiments generally show that “correcting mistaken beliefs about seemingly relevant facts does little to alter related policy preferences.”[40]

Most relevant to this study is a recent experiment by Schiff et al. that tested whether providing information about the number of fatal police shootings in respondents’ cities influenced their support for various police reforms. These authors concluded that this informational intervention had no significant effects on support for reforms, either overall or as a function of the magnitude and direction of misestimates; support was instead most strongly associated with partisanship.[41]

Other studies have similarly concluded that though receiving information can meaningfully affect perceptions, this effect does not carry over to policy preferences.[42] On the other hand, some earlier and more recent studies do show[43] that people adjust their policy preferences in the face of belief-discordant evidence.[44]

This Study’s Prediction: Policy Preferences Can Change

Given this conflicting evidence, predictions for the current study are not straightforward. But on the assumption that (mis)estimates of police use-of-force statistics do causally influence policing policy attitudes, respondents can be expected to update the latter when presented with estimation-corrective information.

Specifically, relative to the uncorrected respondents (i.e., the control group), estimation-corrected respondents who generally overestimated (underestimated) the official use-of-force statistics will express greater support for (opposition to) traditional policing-centered anticrime measures. The effects of corrective information on policy attitudes are also expected to grow as the magnitude of over- and underestimates increases. Finally, if corrective information shifts policy attitudes by shifting general perceptions of the problem and severity of police brutality and racism, shifts in perceptions are expected to at least partially mediate shifts in policy attitudes.

Part 2: The Current Study’s Results and Analysis

Recruiting a Closely Representative Sample

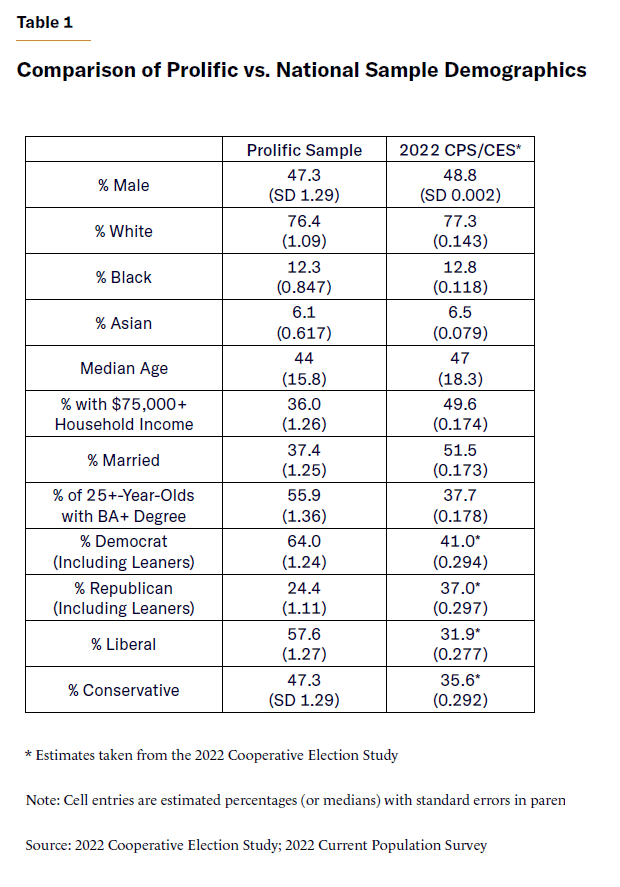

To test the predictions outlined in this report’s previous section, all of which were preregistered via the Open Science Framework (OSF) portal,[45] I recruited a sample of 1,508 U.S. adult (18+) respondents via the crowdsourcing platform Prolific. Recruitment began on February 13 and concluded on February 16, 2023. Although not a random sample of the U.S. population, respondents were recruited using cross-stratified demographic quotas[46] to produce a sample that approximates the broader U.S. population in terms of age, sex, and ethnicity.

This similarity is confirmed in Table 1, which compares the demographic composition of recruited Prolific respondents with adult (18+) respondents from the 2022 Current Population Survey and the 2022 Cooperative Election Study. While Prolific adults are somewhat younger than adults in the broader population (median age=44 vs. 47), the sex and ethnicity distributions of the former closely match the latter.

For other variables, though, the correspondence is (unsurprisingly)[47] much weaker. Specifically, Prolific adult respondents report lower household incomes, are less likely to be married (37.4% vs. 51.5%), are more likely to have BA degrees (55.9% vs. 37.7%), and are far more likely to identify as Democrats (64% vs. 41%) and liberals (57.6% vs. 31.9%) than adults in the broader population. Consequently, Prolific sample means and variances for certain outcome variables are likely to meaningfully differ from those observed in the general population.

Nonetheless, research indicates that relationships and treatment effects observed in convenience and less representative samples do typically replicate in nationally representative samples.[48] Therefore, we can be confident that the substantive results of the current study will generalize to the broader U.S. adult population.

Estimating and Analyzing Police–Public Interaction

Estimates of Nonlethal Force

To gauge respondents’ perceptions of police use of force, the first estimation questions began by informing respondents about the 2018[49] Police–Public Contact[50] (PPC) survey, which the U.S. Bureau of Justice Statistics (BJS) “conducted on large nationally representative sample of 139,692 U.S. respondents ages 16 and older to measure and better understand the public’s interactions with police over the previous 12 months.”[51]

Respondents were then separately[52] asked to estimate, using their “best guess and without looking up the answer,”[53] the percentage of all, white, and black PPC respondents who reported being stopped or approached by police over the previous 12 months. Then they were separately asked to estimate the shares of these respondents who subsequently reported being subjected to nonlethal force, which was defined for them (and by BJS) as kicking, hitting, pushing, grabbing, tasering, pepper-spraying, handcuffing, or gun-pointing.

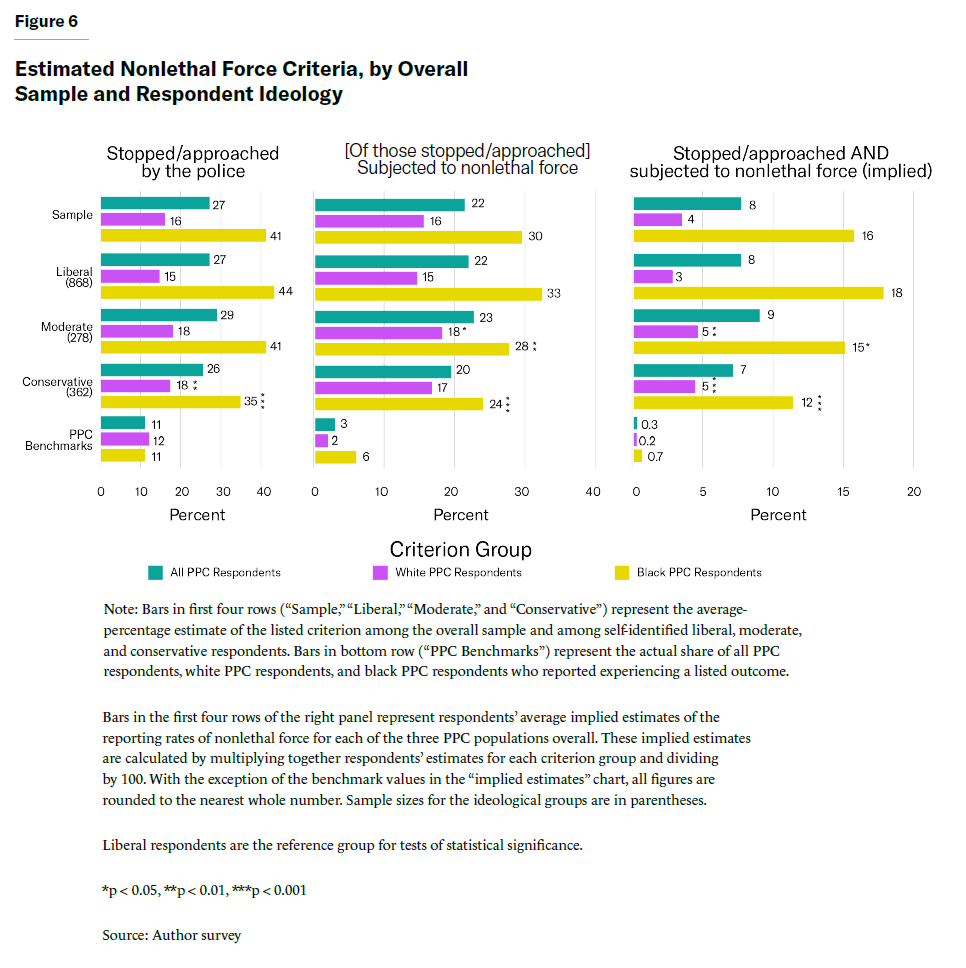

Figure 6 charts the average estimates for the overall sample and for the three ideological groups. Bars in the final rows of each column report the benchmark estimates (i.e., the “correct” answers) from PPC.

Beginning with the left panel, we see that most respondents significantly overestimated the share of PPC respondents who reported being stopped/approached by police over the previous year. On average, respondents believed that such interactions were reported 2.5 times more frequently than they actually were, a pattern that was consistent across ideological groups.

When considering race-specific estimates of reported police-initiated contact, the Prolific survey adults’ estimates for white PPC respondents tended to be closer to the mark, while those for black PPC respondents were considerably less accurate. Specifically, respondents overestimated the white reporting rate by 4 points and overestimated the black rate by 30 points. While this pattern was evident across political ideologies, it was most pronounced among liberals, who overestimated the black rate by an additional 9 points, compared with conservatives.

A very similar pattern of overestimation is observed in estimates of the prevalence of nonlethal force (middle panel). While the actual report of such force among those stopped or approached by the police in the 2018 PPC survey was just 3%, the average respondent’s estimate was more than seven times higher. Estimates for black PPC respondents were, again, even less accurate in absolute terms. Whereas 6% of blacks who reported police-initiated contact reported being subjected to nonlethal force, the average respondent estimated that 30% did so, with liberal respondents again overestimating more than conservatives.

Taking both these and the earlier police-initiated contact estimates into account, the average respondent’s (implicit)[54] estimate of the share of all blacks who reported experiencing nonlethal force (right panel) was nearly 23 times—and those of liberals and conservatives 26 and 17 times—the actual rate reported in the PPC survey.
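One way to read the "implicit" estimate is as the product of two separately elicited quantities: the estimated share who reported police-initiated contact, and the estimated share of those contacted who then reported nonlethal force. The sketch below assumes that multiplicative reading. The function name and the input values are hypothetical placeholders, not figures from the survey or from PPC.

```python
def implicit_force_share(contact_share: float, force_given_contact: float) -> float:
    """Share of a whole group implied to have reported nonlethal force.

    Both arguments are proportions between 0 and 1.
    """
    if not (0.0 <= contact_share <= 1.0 and 0.0 <= force_given_contact <= 1.0):
        raise ValueError("inputs must be proportions between 0 and 1")
    return contact_share * force_given_contact

# Hypothetical inputs, chosen only to illustrate the calculation.
estimated = implicit_force_share(contact_share=0.40, force_given_contact=0.30)
benchmark = implicit_force_share(contact_share=0.10, force_given_contact=0.06)

# Ratio of the implied estimate to the implied benchmark.
overestimate_factor = estimated / benchmark
print(f"estimated={estimated:.3f}, benchmark={benchmark:.3f}, "
      f"factor={overestimate_factor:.1f}")
```

Because the two overestimates multiply, an error at each step compounds into a much larger error in the implied share for the group as a whole.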

In conclusion, respondents[55] of all political backgrounds significantly overestimated the prevalence of police use of nonlethal force. This error, however, tended to be larger for estimates of force reported by black Americans and larger still for liberal than for conservative respondents.

Estimates of Fatal Officer-Involved Shootings (FOIS)

A second estimation series was designed to measure the accuracy of respondents’ perceptions of FOIS,[56] including their distribution across racial groups. The series began by informing respondents that, according “to data from the U.S. Census Bureau, the U.S. population averaged 326.5 million people between 2015 and 2022.”[57] This was done to ensure that respondents were not ignorant of the U.S. population size and that estimates had the same meaning[58] for all respondents.

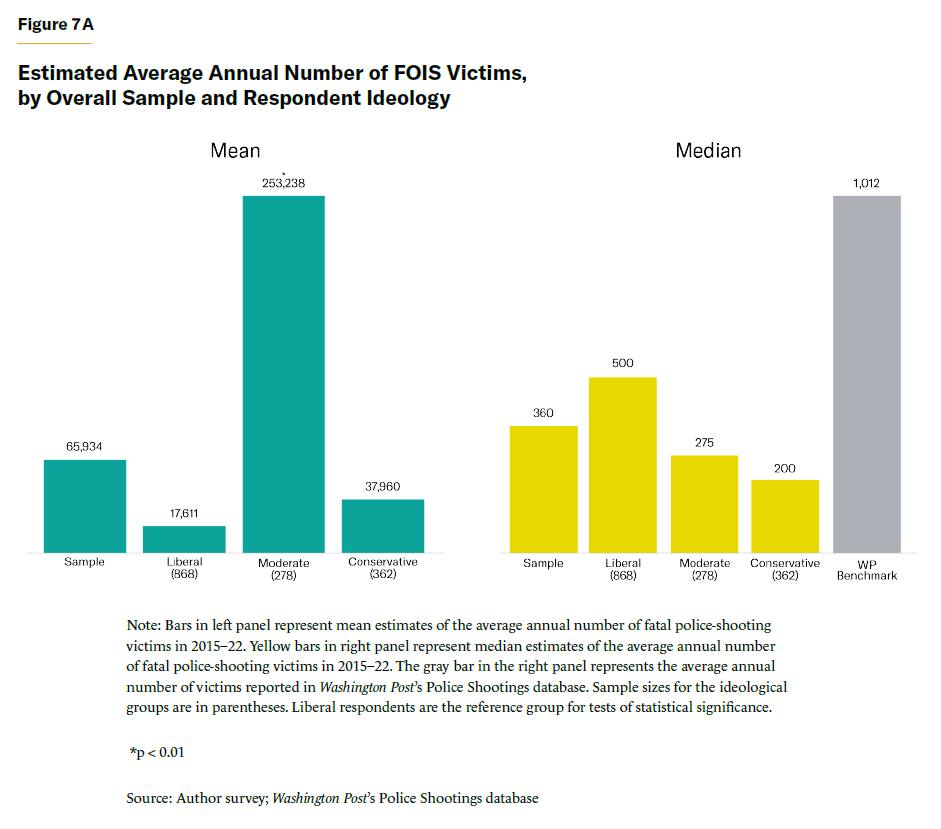

After again being reminded to use their “best guess and without looking up the answer,” respondents were asked to estimate the number of people “shot and killed by police in the U.S. each year on average between 2015 and 2022.”[59] To benchmark these estimates, I use the average annual number of fatal shooting victims (1,012) reported in the Washington Post’s (WP) Police Shootings database. Average and median estimates for the sample overall and for each of the three ideological groups are presented in Figure 7A.

Earlier, it was predicted that respondents, in general, would overestimate the number of fatal police-shooting victims, with liberals doing so to a greater degree than conservatives. However, the data do not support these predictions.

Although the average respondent estimate (65,934 deaths) vastly exceeded the Washington Post benchmark (1,012), this is largely due to respondents whose estimates were extremely high outliers.[60] The median, which is less influenced by such outliers, therefore offers a more accurate account of respondents’ estimation tendencies. Quite unexpectedly, the median estimate (360 FOIS victims) falls well short of the benchmark number. Additionally, while the median estimate among liberals (500) was higher than that of conservatives (200), it was also significantly closer to the Washington Post benchmark, making it the more accurate of the two.
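The gap between the mean and the median can be illustrated with a small sketch. The values below are hypothetical and are not survey data; they only show how a single extreme guess can pull the mean far above what most respondents answered while leaving the median unchanged.

```python
from statistics import mean, median

# Hypothetical estimates of annual FOIS victims; not survey data.
# Most guesses are in the hundreds, and one respondent gives an extreme value.
estimates = [100, 200, 300, 400, 500, 800, 1_000_000]

mean_estimate = mean(estimates)
median_estimate = median(estimates)

print(f"mean   = {mean_estimate:,.0f}")
print(f"median = {median_estimate:,.0f}")
```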

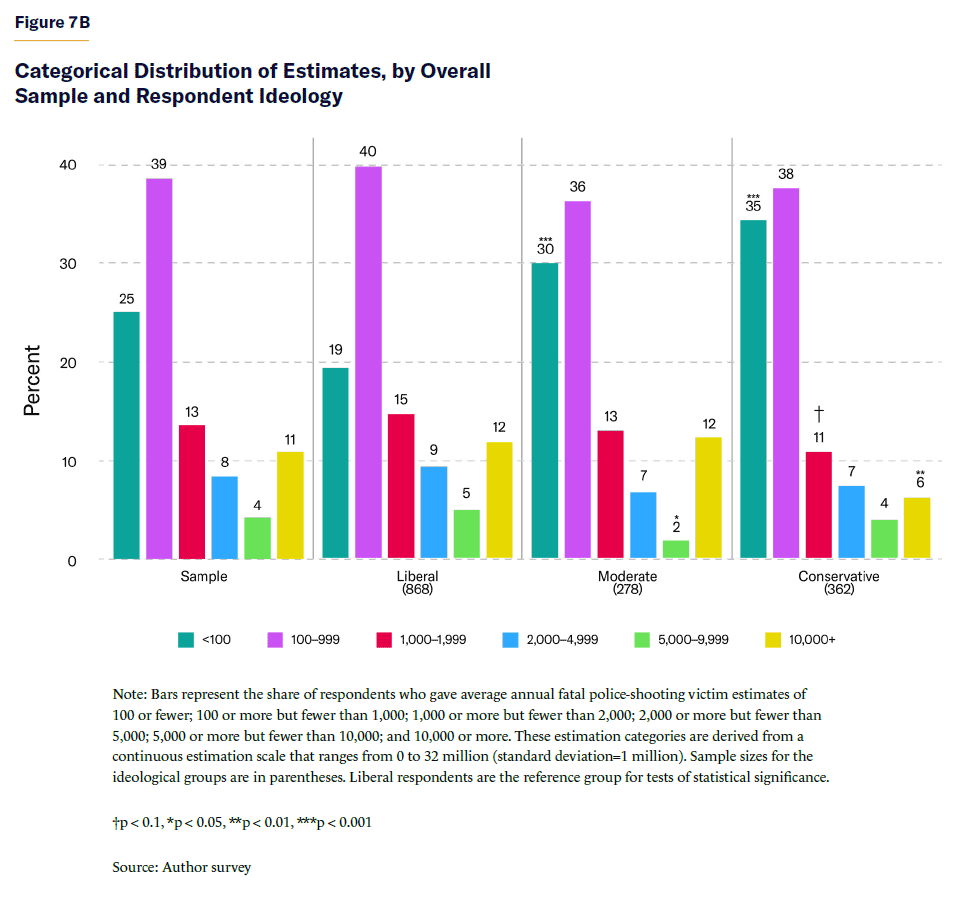

Figure 7B further elucidates this pattern by categorizing the continuous estimation scale into six ranges.

We observe that 64% of all respondents gave estimates of fewer than 1,000 average annual FOIS victims, including 25% who gave estimates of fewer than 100. Meanwhile, in the other direction, 11% of respondents gave estimates of 10,000 or more. These data also indicate that conservatives were significantly more likely to place into the “< 100” estimation category than liberals (35% vs. 19%), while liberals were twice as likely to place into the “10,000+” category as conservatives.
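A minimal sketch of how a continuous estimate can be sorted into ranges of this kind follows. Only the "< 100" and "10,000+" categories and the 1,000 threshold are named in the text; the remaining cut points, the labels, and the sample values are assumptions made for illustration.

```python
from bisect import bisect_right
from collections import Counter

# Assumed bin edges: only "< 100", the 1,000 threshold, and "10,000+" come
# from the text; the other cut points are hypothetical.
EDGES = [100, 500, 1_000, 5_000, 10_000]
LABELS = ["< 100", "100-499", "500-999", "1,000-4,999", "5,000-9,999", "10,000+"]

def categorize(estimate: float) -> str:
    """Return the label of the range that contains the estimate."""
    return LABELS[bisect_right(EDGES, estimate)]

# Hypothetical estimates; not survey data.
estimates = [50, 99, 100, 360, 750, 1_012, 7_500, 10_000, 250_000]
counts = Counter(categorize(e) for e in estimates)

# Share of respondents falling into each range.
for label in LABELS:
    share = counts[label] / len(estimates)
    print(f"{label:>12}: {share:.0%}")
```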

All told, these results are mostly at odds with expectations. Rather than significantly overestimating, the median respondent significantly underestimated the average annual number of FOIS deaths across the 2015–22 period. And while conservatives were, as predicted, more likely to underestimate than liberals, and liberals more likely to overestimate than conservatives (see Figure 7B), liberals’ estimates were actually more accurate (i.e., closer to the benchmark) overall than conservatives’ estimates.

These findings are difficult to square with those observed in the 2021 Skeptic survey, wherein 44% of liberals vs. 20% of conservatives estimated that 1,000 or more unarmed blacks alone were killed by police in 2019. This discordance[61] suggests that estimates of this sort are highly sensitive to differences[62] in question format and measurement scales. Future researchers of this topic should be mindful that estimates of the same phenomenon are unlikely to be reliably consistent across different measurement designs.

Estimates of the Racial Distribution and Unarmed Share of FOIS Victims

After estimating the average annual number of FOIS victims, respondents were informed[63] that, according to data from the U.S. Census Bureau, non-Hispanic white Americans, black Americans, and Asian Americans constituted an average of 61%, 12.5%, and 5.5% of the U.S. population, respectively, during 2015–22. With their estimates of the annual average number of FOIS victims given as a reference, they were then asked to estimate the white, black, Asian, and “other”[64] (i.e., nonwhite, nonblack, non-Asian) share of all police-shooting victims in an average year during 2015–22. Finally, respondents were separately[65] asked to estimate the average “unarmed” share (i.e., those unarmed when killed) of each group’s FOIS victims during this period.

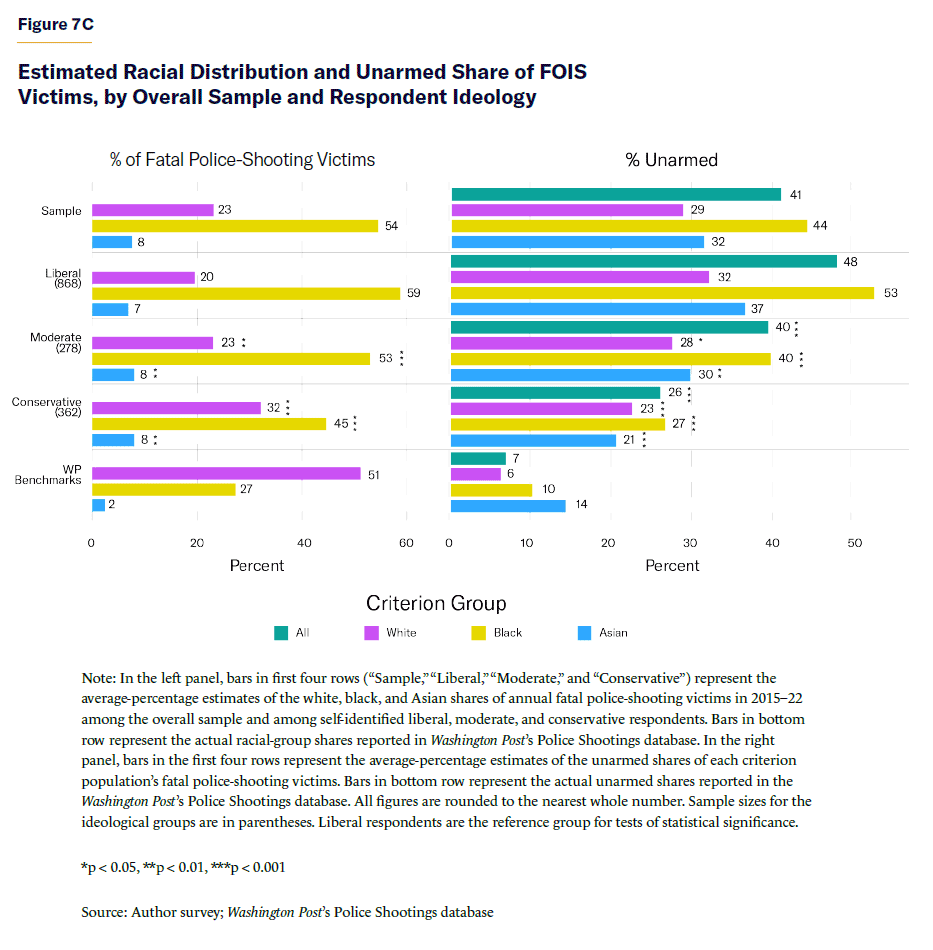

As predicted, Figure 7C shows that the average respondent substantially underestimated the white share and substantially overestimated the black (and, if to a lesser extent, the Asian) share of FOIS victims.

Specifically, according to the Washington Post Police Shootings database, white, black, and Asian Americans, respectively, constituted an average of 51%, 27%, and 2% of racially identified[66] shooting victims during 2015–22. But in the estimation of the average respondent, as found by this survey, white Americans constituted an average of 23% (–28 points), black Americans 54% (+27 points), and Asian Americans 8% (+6 points) of all victims. Further, while respondents across all ideological groups overestimated and underestimated the black and white shares, respectively, liberal respondents did so to a significantly greater degree than conservatives.
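The point differences quoted above are simply the average estimated share minus the benchmark share for each group. A short Python sketch using the figures from the text (the dictionary and variable names are illustrative):

```python
# Benchmark and average estimated shares (percent) of FOIS victims, 2015-22,
# as reported in the text.
benchmark = {"white": 51, "black": 27, "Asian": 2}
estimated = {"white": 23, "black": 54, "Asian": 8}

# Signed error in percentage points: positive means an overestimate.
errors = {group: estimated[group] - benchmark[group] for group in benchmark}

for group, error in errors.items():
    print(f"{group}: {error:+d} points")
```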

Just as they misestimated the distribution of FOIS victims’ race, respondents (particularly liberals) similarly misestimated the unarmed share of fatal police-shooting victims.

Per the Washington Post data, an average of 7% of all FOIS victims—including 6% of white, 10% of black, and 14% of Asian[67] victims—were unarmed when shot and killed by police during 2015–22. The average respondent, however, overshot these benchmarks by 18–34 points, with the largest average overestimates given for black victims (+34). Overestimates were also again greater—generally double in size—among liberal than conservative respondents. For instance, whereas the average conservative estimated that 27% of black victims were unarmed, the average liberal estimated that more than half (53%) were.

In sum, respondents[68] generally give lower estimates of the number of FOIS victims than the Skeptic data depict, which suggests that they have a much more reasonable sense of that number than the Skeptic data imply. Yet the current results also suggest that their estimates of the racial distribution of fatal police-shooting victims and of the extent to which such victims are unarmed are significantly distorted. This is especially the case among liberal respondents. As will be described later, misestimates of these distributions may be just as important for respondents’ perceptions of the problem and their policy preferences as (if not more important than) the sheer volume of cases.

A Secondary Analysis

While this report’s primary analyses focus on estimations related to police use of force and its racial disparities, it is important to note that the concern that respondents associate with their estimates—i.e., whether they consider perceived levels of force and racial disparities “serious problems”—may be shaped by their beliefs about the necessity of police use of force and what they think might be the cause of racially disparate policing outcomes.[69]

With this in mind, respondents were additionally asked to estimate: (a) the share of all violent crimes reported in 2021 attributed to offenders of different racial groups; and (b) the violent-offender share of the U.S. prison population. I briefly summarize the main results here and relegate a more complete and detailed discussion of these secondary analyses to Appendixes A.3 and A.4.

The first of these analyses found that respondents generally underestimated both the black and white American share of 2021 violent crimes reported to the FBI’s National Incident-Based Reporting System (NIBRS). Liberals underestimated the black share to a greater extent than conservatives; conservatives underestimated the share attributable to white offenders to a greater extent than liberals.[70]

In the second analysis, respondents underestimated the violent-offender share of the U.S. state prison population, with this tendency being most pronounced among liberals. While BJS puts the share of violent offenders at a little under 60%, nearly half (48%) of all liberal respondents gave estimates of 40% or less. In contrast, while conservatives were more likely to give large (80%+) overestimates, the share of conservative respondents (14%) who did so was comparatively tiny.

All in all, these secondary analyses reveal patterns of inaccurate estimates among survey respondents. These patterns could inform the extent to which people are alarmed by police use of force and racial disparities in policing outcomes. Moreover, they provide additional evidence that estimation inaccuracies are near-universal but differ in degree by political ideology.

A comprehensive summary of these estimation inaccuracies and ideological differences is provided in Appendix A.5.

Part 3: Applying the Treatment to Respondents’ Estimates

So far, this study has shown that respondents’ perceptions of police use of force and its victims are often disconnected from reality and that the perceptions of conservatives tend to (with some exceptions) be mildly to moderately more accurate than those of liberals. These findings are interesting, but we might ask, “So what?” Do the misperceptions that I document have any practical significance? Do they inform people’s opinions of the severity and scale of police violence and their issue-related policy preferences? Or are they nothing more than wild guesses and expressive partisanship?

Experimental Design and Procedure

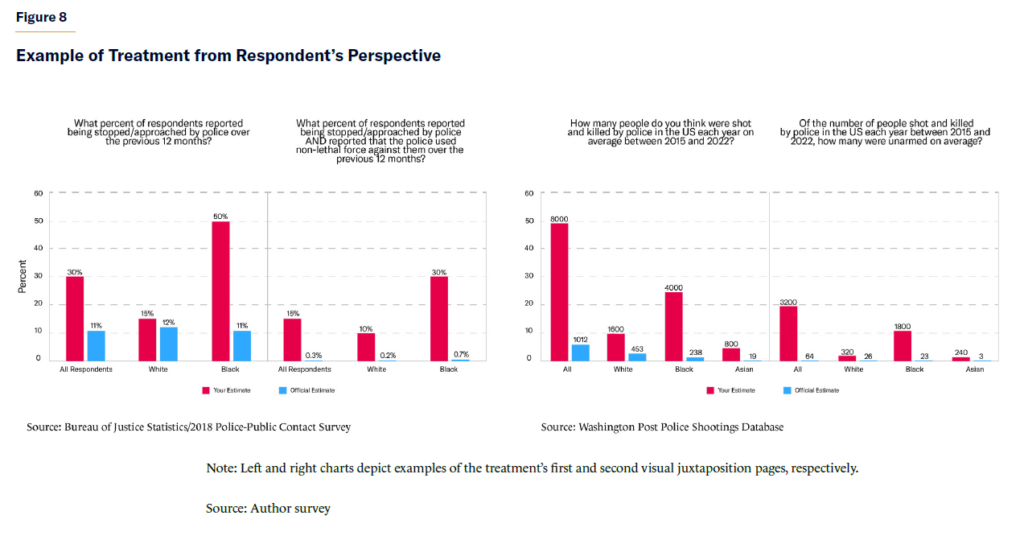

To answer those questions, we must study how attitudes change in the face of information that conflicts with those attitudes. To this end, after completing the estimation modules, respondents were randomly assigned to either a treatment or control group. Those assigned to the treatment group were told that they would next be shown “a number of charts that visualize how far off (their) estimates were from official estimates,” which were “generally derived from data reported by the U.S. Bureau of Justice Statistics.” Then respondents were shown individualized visual juxtapositions of all their explicit and implicit (i.e., derived from their explicit estimates) criterion estimates with the official benchmarks.

Examples of the first two individualized visualization pages—pertaining to estimates of the use of force—are displayed in Figure 8. In this specific case, the respondent would first be made aware of the fact that, among other biases, his/her estimate of the percentage of all black PPC respondents who reported being stopped by the police and reported being subjected to nonlethal force was more than 40 times larger than what was reported in the 2018 PPC survey. The next visualization page would inform the respondent that he/she substantially overestimated the annual number and share of black FOIS victims as well as the number and share of black victims who were shot unarmed.

A third and final visualization page (see Appendix B.5) would compare the respondent’s estimates of racial-group violent-crime shares and the violent-offender share of the state prison population with their respective benchmark estimates.[71]

After viewing all three pages, treatment-group respondents were subsequently asked—in multiple-choice format—to recollect several of the official estimates that they were shown. “From the information (they) just reviewed,” respondents were asked to indicate: (a) the percentage of white and black Americans who reported being both stopped or approached by the police and being subjected to nonlethal force in 2018; (b) the number of unarmed white, black, and Asian Americans who were shot and killed by police each year on average between 2015 and 2022; and (c) the percentage of all violent crimes in 2021 that were committed by white, black, and Asian Americans. This was done to test and reinforce the absorption of the information, but also to identify respondents in need of “re-treatment,” as respondents who selected an incorrect answer option[72] were notified of their error and shown the correct answer.

Respondents assigned to the control group, in contrast, received no such visual juxtapositions. They were simply directed to an honesty check[73] and proceeded to answer questions about the prevalence and severity of police violence and their support for specific policing policy proposals. These attitudinal measures are further described below.

How I Measured the Survey Results

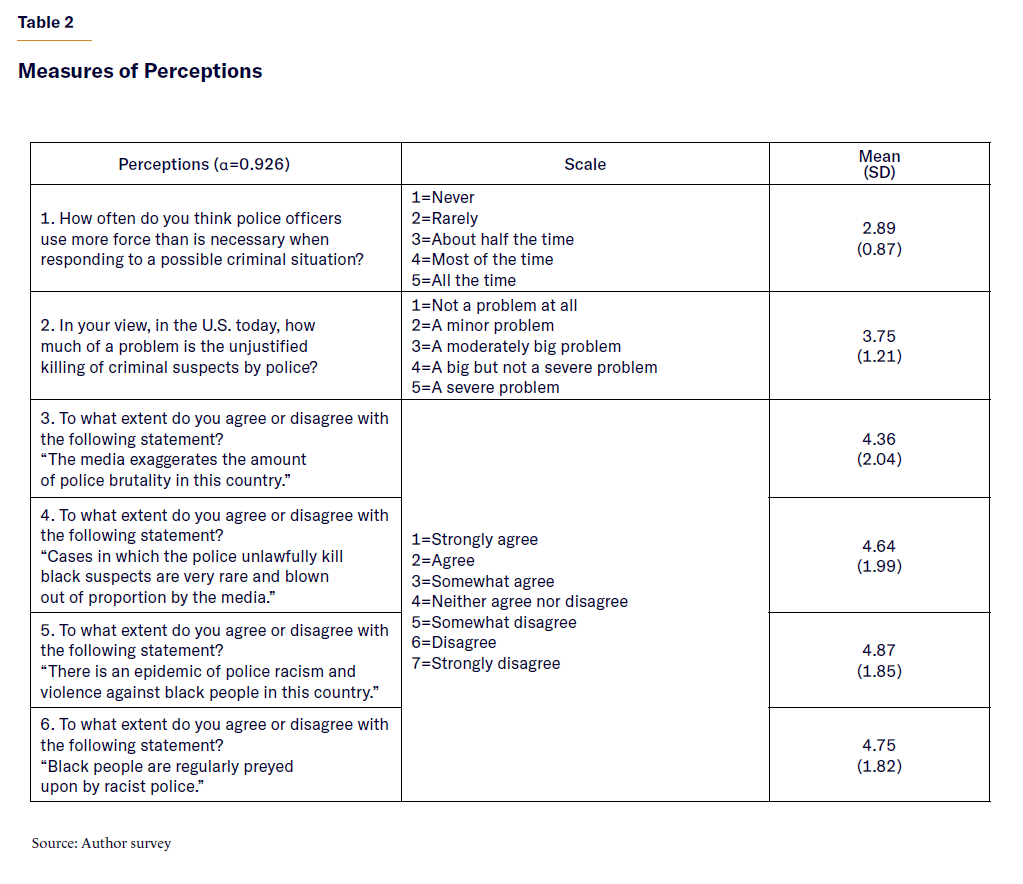

Respondents’ General Perceptions of Police Brutality and Racism

Upon completing an honesty check, respondents were asked to respond to six questions and statements designed to capture their appraisals of the severity and prevalence of: (a) excessive police force in general, including unjustified killings of criminal suspects; and (b) police racism and violence against black Americans in particular. All six items are reproduced in the first column of Table 2. Together, they form a very reliable (α=0.926), though not necessarily unidimensional,[74] index, which I employ in the forthcoming analysis.
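For readers unfamiliar with the reliability coefficient reported above, the sketch below shows the standard Cronbach's alpha formula. It assumes that the reported coefficient is Cronbach's alpha and that items are numerically coded; the toy responses are invented for illustration and are not survey data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for k items.

    items: list of k lists, one per survey item, each holding the
    responses of the same n respondents in the same order.
    """
    k = len(items)
    n = len(items[0])
    # Each respondent's total score across the k items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    item_var_sum = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - item_var_sum / variance(totals))


# Hypothetical responses from five respondents to three items (1-5 scale).
toy_items = [
    [1, 2, 3, 4, 5],
    [2, 2, 3, 5, 5],
    [1, 3, 3, 4, 4],
]
print(round(cronbach_alpha(toy_items), 3))
```

Values close to 1 indicate that the items move together closely; as the text notes, a high alpha does not by itself establish that the items measure a single dimension.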

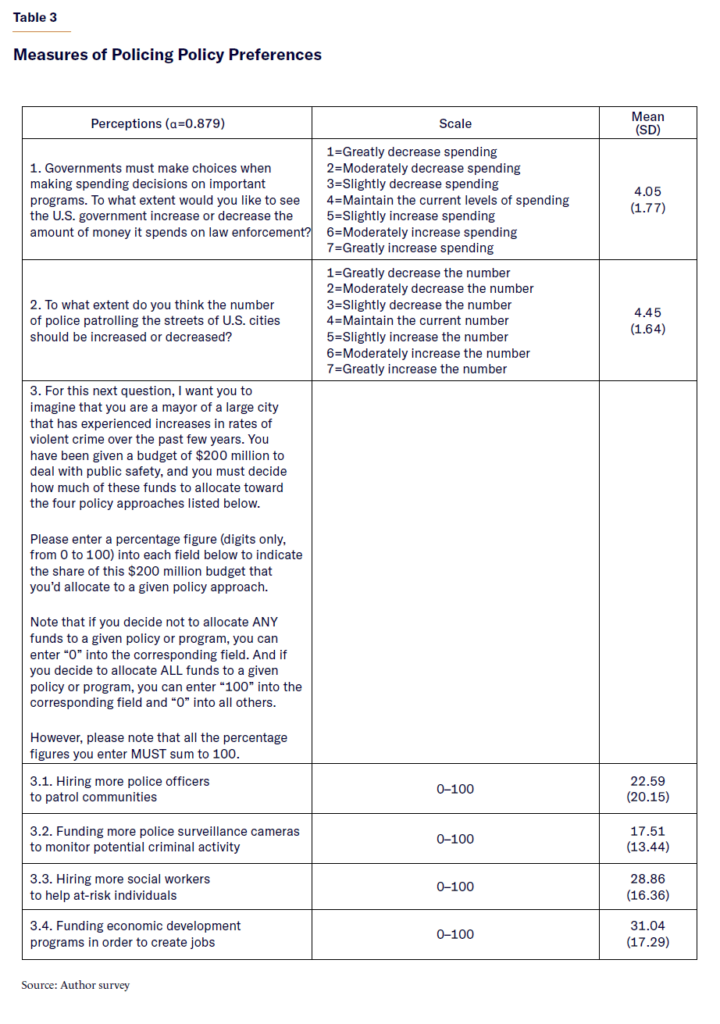

Respondents’ General Policing Policy Preferences

After completing the measures of perceptions, respondents were asked a series of three policy-related questions (Table 3). The first two questions measure the extent to which respondents think that federal spending on law enforcement and the number of police patrolling the streets of U.S. cities, respectively, should be increased or decreased. A third and final measure, which was adapted from Cohen et al.,[75] is designed to force respondents to make policy trade-offs.

It asks respondents to play the role of a mayor of a large city that has experienced upticks in violent crime. Respondents are given a $200 million “public safety” budget and must decide how much of it to spend on four anticrime policy approaches. Two of these approaches—hiring more police officers to patrol communities and funding more crime-detection cameras—are “carceral” in nature, in that they focus on traditional law-enforcement methods for deterring and responding to crime. The other two—hiring more social workers to assist at-risk individuals and funding economic development programs—are “softer” measures that aim to address “root causes” and socioeconomic contributors to crime.

While all policy items will be modeled individually, I also combine and model them as an index. To construct this index, I first sum the two carceral allocation items[76] to create a combined “carceral allocations” scale. This scale and the two ordinal measures are then z-transformed (i.e., to a mean of 0 and a standard deviation of 1) and subsequently averaged together.
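The construction just described can be sketched as follows. This is a minimal illustration under stated assumptions: the two ordinal items are assumed to be coded numerically (higher values meaning more support for increases), allocations are in millions of dollars out of the $200 million budget, and the population standard deviation is used for the z-transform. All names and toy values are hypothetical.

```python
from statistics import mean, pstdev


def zscore(values):
    """Rescale values to mean 0 and standard deviation 1."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def policy_index(spending, patrols, police_alloc, camera_alloc):
    """Average of three z-scored components, one value per respondent."""
    # Step 1: sum the two carceral allocation items.
    carceral = [p + c for p, c in zip(police_alloc, camera_alloc)]
    # Step 2: z-transform the combined scale and the two ordinal items.
    z_cols = [zscore(spending), zscore(patrols), zscore(carceral)]
    # Step 3: average the three z-scores within each respondent.
    return [mean(row) for row in zip(*z_cols)]


# Hypothetical data for five respondents.
spending = [1, 2, 3, 4, 5]             # assumed 1-5 ordinal coding
patrols = [2, 2, 3, 5, 4]              # assumed 1-5 ordinal coding
police_alloc = [20, 40, 60, 80, 100]   # $ millions to hiring officers
camera_alloc = [10, 20, 30, 40, 50]    # $ millions to cameras

index = policy_index(spending, patrols, police_alloc, camera_alloc)
print([round(v, 2) for v in index])
```

Because each component is standardized before averaging, no single item dominates the index simply because it is measured on a wider scale.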

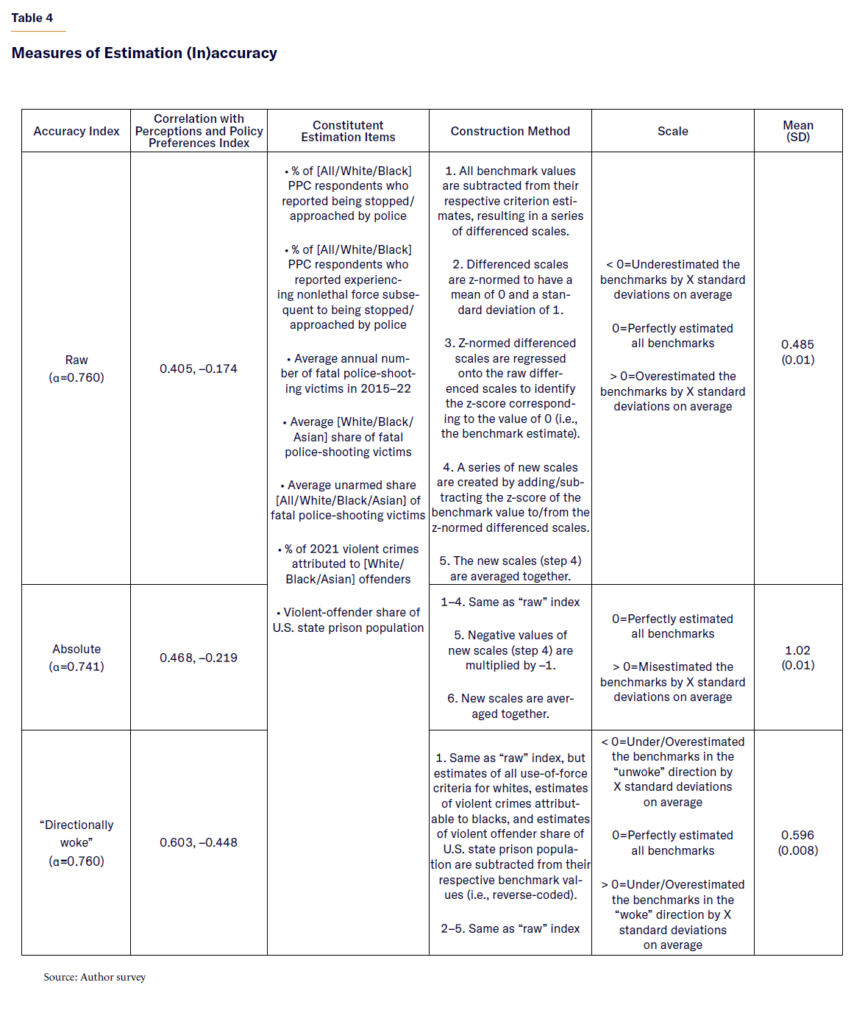

Respondents’ Estimation (In)accuracy

Both the size and direction (i.e., positive or negative) of the treatment’s effects on perceptions and policy preferences are expected to vary, depending on the magnitude and direction of the respondents’ estimation errors. To test this prediction, I created a summary estimation (in)accuracy index that indicates how far off respondents’ estimates were from the benchmarks on average. Table 4 presents three ways of constructing such indexes, which I further discuss and analyze in Appendix A.5.[77]

I report the results of these analyses in Appendixes A.6 and A.7. But ultimately, the index that best captures respondents’ estimation patterns and best predicts their general perceptions and policy preferences is the third index, which I refer to as the “directionally woke” estimation (in)accuracy index. This method reverse-codes: (a) all use-of-force criterion estimates for whites; (b) estimates of the share of 2021 violent crimes attributable to blacks (see Appendix A.3); and (c) estimates of the violent-offender share of the U.S. state prison population (see Appendix A.4).

I adopt the “directionally woke” estimation (in)accuracy index for some of the experimental analyses that follow.

To understand the scores on this “directionally woke” (in)accuracy index, consider the index’s hypothetical endpoints. For instance, a maximally “directionally woke” respondent is one who estimates that police use of nonlethal and lethal force is omnipresent and exclusively directed against nonwhites, that all (nonwhite) fatal police-shooting victims are unarmed, that whites constitute all of the country’s violent criminal offenders, and/or that the U.S. state prison population is exclusively filled with nonviolent offenders.

Conversely, at the hypothetical bottom of the scale, a maximally “directionally unwoke” respondent is one who perceives that police use of force either never occurs or is exclusively directed against whites, that no fatal police-shooting victims are unarmed or that only white victims are unarmed, that nonwhites commit all violent crimes, and/or that the U.S. state prison population exclusively consists of violent offenders.

Of course, virtually no respondents meet these extreme descriptions. What the “directionally woke” index measures, then, is the extent to which the respondents’ estimates tended to be “woke” or “unwoke.” Accordingly, a (hypothetical) score of 0 on the index denotes a respondent who estimated each of the criterion benchmarks with perfect accuracy, while scores above and below zero represent the extent to which respondents misestimated in the “woke” and “unwoke” directions, respectively.
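The logic of a directional index can be sketched in a few lines. The sketch assumes that each criterion contributes a signed error (estimate minus benchmark), that reverse-coded criteria have their sign flipped, and that the errors are then averaged. The exact scaling used in Table 4 is not reproduced here, so raw percentage points stand in for it, and treating the white share of FOIS victims as a reverse-coded item is an illustrative assumption.

```python
from statistics import mean


def directional_index(criteria):
    """Mean signed misestimate after reverse-coding.

    criteria: list of (estimate, benchmark, reverse_coded) tuples.
    Positive scores indicate misestimation in the "woke" direction,
    negative scores the "unwoke" direction, and 0 perfect accuracy.
    """
    signed = []
    for est, bench, reverse in criteria:
        err = est - bench
        # Reverse-coded items: an underestimate counts as "woke".
        signed.append(-err if reverse else err)
    return mean(signed)


# Average estimates and benchmarks for two criteria reported earlier.
example = [
    (54, 27, False),  # black share of FOIS victims: +27
    (23, 51, True),   # white share of FOIS victims: -28, flipped to +28
]
print(directional_index(example))
```

Reverse-coding matters because, without it, an overestimate of the black share and an underestimate of the white share would partly cancel out even though both point in the same substantive direction.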

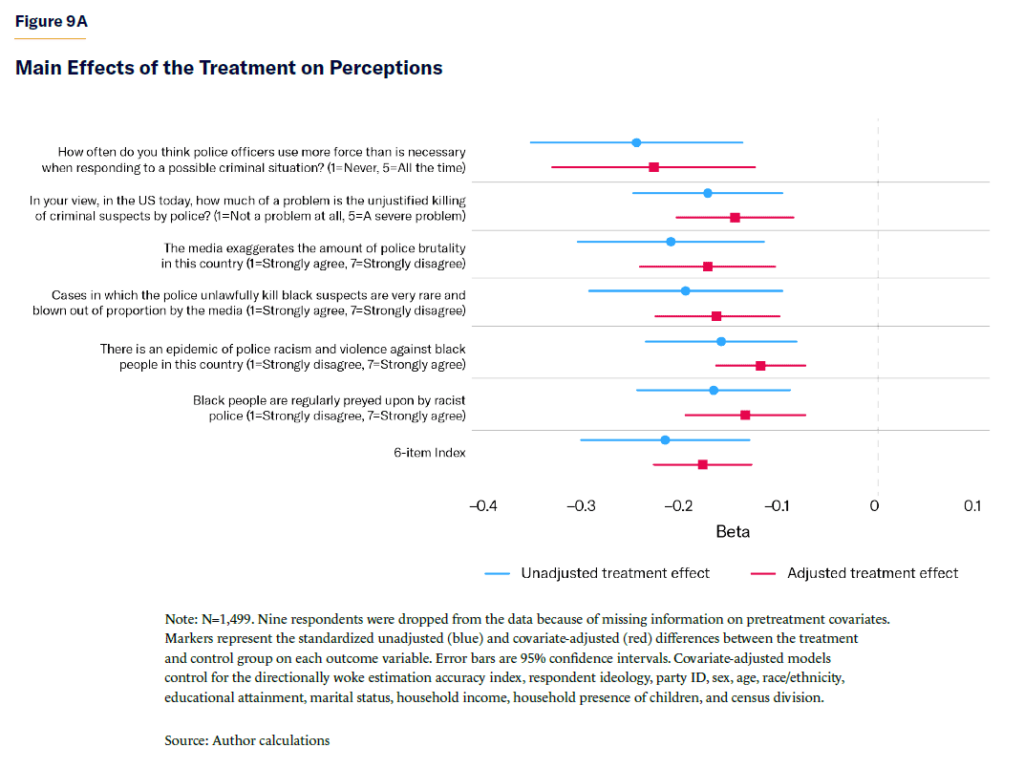

Main Treatment Effects on Perceptions

To assess the overall impact of the treatment on perceptions of police brutality and racism, each of the six perception indicators and the combined index were regressed separately on a treatment dummy variable. This variable codes respondents who received the informational intervention as “1” and all others, who make up the control group, as “0.” To enhance the precision of the treatment’s estimated effects, “covariate-adjusted” models were also fitted that control for all pretreatment variables,[78] including the directionally woke accuracy index.
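As a rough illustration of the unadjusted models, the sketch below regresses a standardized outcome on a 0/1 treatment dummy. With a single binary regressor, the OLS slope equals the difference in group means, so the coefficient reads directly as a treatment effect in SD units. The data are synthetic, the standardization by the pooled population SD is an assumption, and the covariate-adjusted models are not shown.

```python
from statistics import mean, pstdev


def ols_slope(x, y):
    """Slope from a one-predictor OLS regression of y on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def standardized_treatment_effect(treated, outcome):
    """Treatment coefficient with the outcome z-scored (pooled SD)."""
    m, s = mean(outcome), pstdev(outcome)
    z = [(v - m) / s for v in outcome]
    return ols_slope(treated, z)


# Synthetic example: 1 = received the intervention, 0 = control.
treated = [0, 0, 0, 0, 1, 1, 1, 1]
outcome = [4, 5, 3, 4, 3, 3, 2, 4]  # hypothetical perception scores

effect = standardized_treatment_effect(treated, outcome)
print(round(effect, 3))
```

A negative coefficient here corresponds to the treatment group scoring lower on the perception measure than the control group.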

The treatment group consistently scored significantly lower across all outcomes (Figure 9A), with all p-values < 0.001. The differences for individual items range from 0.159 to 0.244 standard deviations (SD) in the unadjusted models and 0.115 SD to 0.224 SD in the adjusted models.[79] When all six z-normed items are averaged together, the unadjusted and adjusted differences are 0.191 SD and 0.157 SD, respectively, indicating small effect sizes. Importantly, these differences are not merely attributable to shifts within response categories (e.g., “Strongly disagree” → “Disagree”) but also to significant movement between them.[80]

For instance, the share of respondents who answered “Never” or “Rarely” when asked how often they think that police officers use more force than necessary when responding to a possible criminal situation was nearly 10.5 points (adjusted=9.4, p=0.001) higher among those who received the treatment than among respondents in the control group. Treatment-group respondents were also 11.3 points (adjusted=9.3, p < 0.001) less likely to disagree that the “media exaggerates the amount of police brutality” and 9 points (adjusted=7.1, p=0.001) less likely to agree that black people “are regularly preyed upon by racist police.”

These findings suggest that the informational intervention induced genuine attitudinal changes, rather than merely reinforcing or tempering existing views.

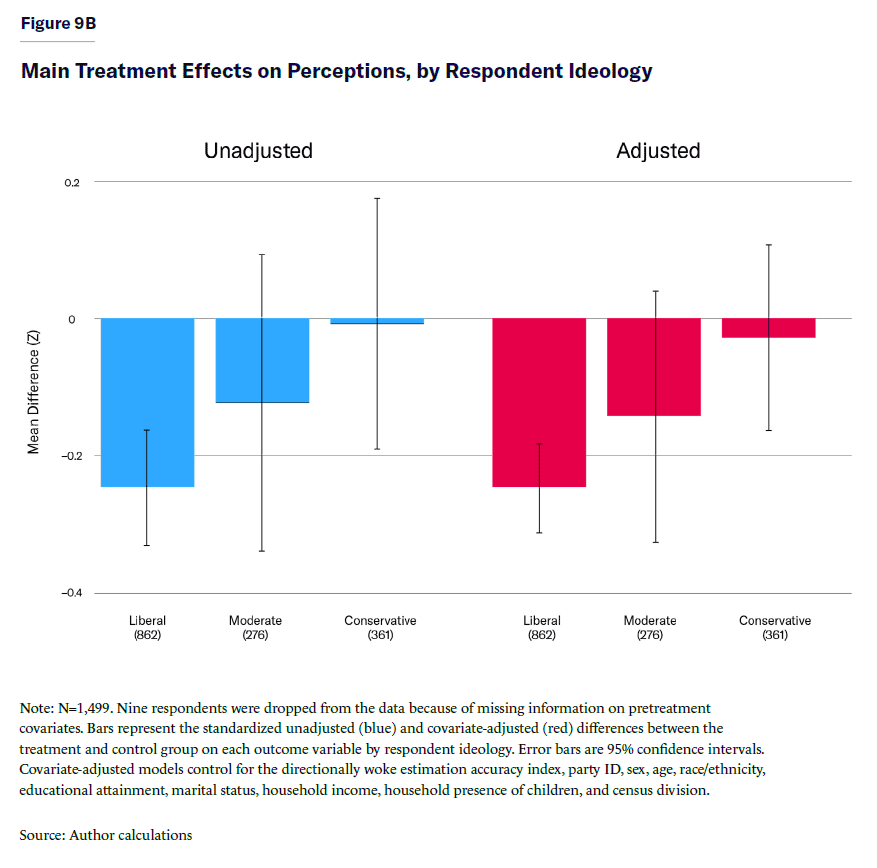

Interestingly, the intervention’s effects[81] on the perceptions index were nearly seven times greater[82] for liberal than for conservative respondents, with the effects on the latter close to zero (Figure 9B). This discrepancy could be partially due to a “floor effect,” as conservative scores on the perceptions index are already so low[83] that they cannot decrease much further. Additionally, conservatives’ relatively smaller average criterion misestimates might be implicated in their null treatment response, as suggested by the results in the next section.

At this point, it is important to note that the effects of the treatment on perceptions were negative and statistically significant, regardless[84] of whether respondents overestimated or underestimated the annual average number of FOIS victims. This suggests that people’s perceptions of police brutality are not exclusively, or even mostly, tied to their (mis)estimates of the overall quantity[85] of FOIS victims. Rather, (mis)estimates of how the number of victims is distributed across racial groups, along with overestimates of the prevalence of nonlethal force against black Americans, appear to play a significant role in shaping these perceptions.

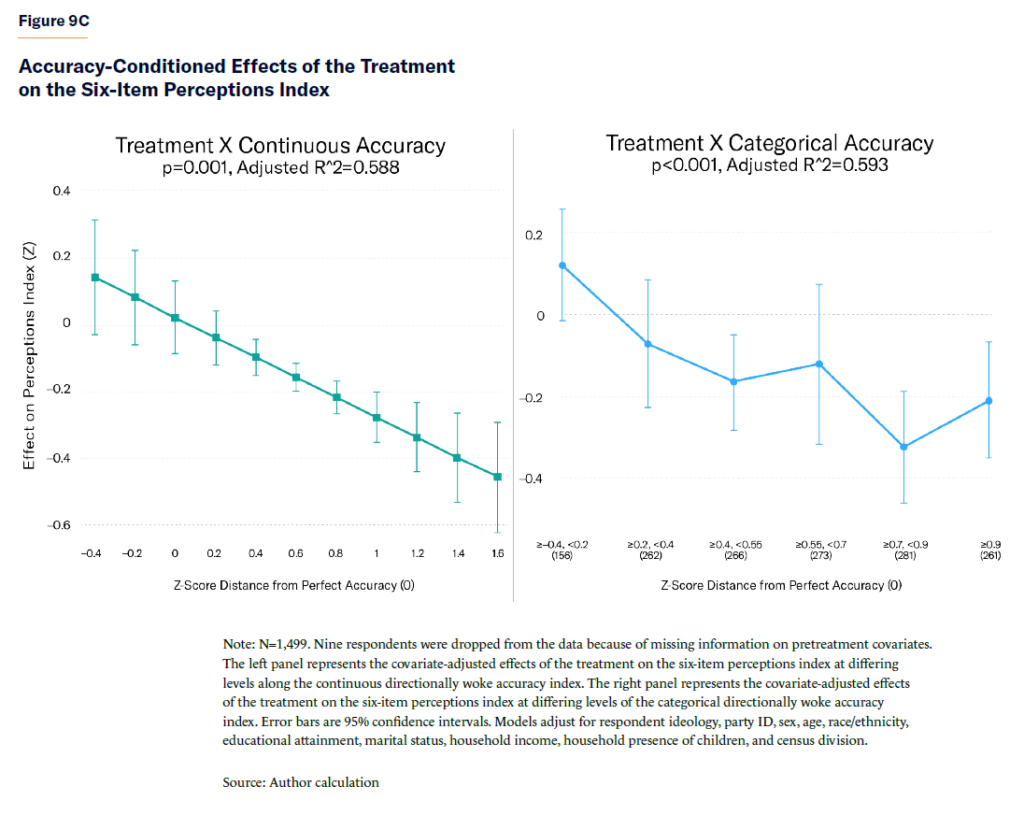

Accuracy-Conditioned Treatment Effects on Perceptions

While the main effects of the treatment are notable, their impact is expected to be even greater on those who gave relatively larger misestimates on average. This would explain why the effects of the treatment are significantly larger among liberals—who score highest[86] on the directionally woke accuracy index—than conservatives.

To test this hypothesis, two interaction models[87] were fitted (Figure 9C). The first model (left panel) interacts the treatment with the continuous directionally woke accuracy index.[88] This model reveals a statistically significant interaction (b=–0.299, p=0.001), suggesting that the intervention’s negative effects on perceptions grow linearly[89] as estimation inaccuracy rises. But the model that uses a categorical[90] accuracy interaction term (right panel) exhibits a superior[91] fit to the data.
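To make the continuous interaction term concrete: in a model of the form outcome = b0 + b1·T + b2·A + b3·(T × A), where T is the treatment dummy and A the accuracy index, the coefficient b3 equals the slope of the outcome on A in the treatment group minus the same slope in the control group. The sketch below computes it that way on synthetic data. It omits the covariates and significance tests of the actual models, and all names are hypothetical.

```python
from statistics import mean


def slope(x, y):
    """OLS slope from regressing y on a single predictor x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def interaction_coefficient(treated, accuracy, outcome):
    """Treatment x accuracy coefficient: treated slope minus control slope."""
    groups = {0: ([], []), 1: ([], [])}
    for t, a, o in zip(treated, accuracy, outcome):
        groups[t][0].append(a)
        groups[t][1].append(o)
    return slope(*groups[1]) - slope(*groups[0])


# Synthetic data: perceptions rise with inaccuracy in the control group
# but fall with it among treated respondents.
treated = [0, 0, 0, 0, 1, 1, 1, 1]
accuracy = [0.0, 0.5, 1.0, 1.5, 0.0, 0.5, 1.0, 1.5]
outcome = [0.0, 0.1, 0.2, 0.3, 0.0, -0.05, -0.1, -0.15]

b_interaction = interaction_coefficient(treated, accuracy, outcome)
print(round(b_interaction, 3))
```

A negative interaction coefficient means the gap between treated and control respondents widens as the accuracy index increases, which is the pattern the continuous model describes.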

Results from this latter model indicate that the intervention led to a nonsignificant 0.118 SD (p=0.084) increase[92] overall in perceptions of police brutality and racism among respondents within the bottom accuracy interval (i.e., ≥ –0.4, < 0.2), which, due to their smaller numbers,[93] combines “directionally unwoke” misestimators and those whose estimates were reasonably close to the benchmark figures on average.

In contrast, at all higher intervals, the intervention progressively and nonlinearly reduces such perceptions. For those in the middle (in)accuracy intervals (i.e., ≥ 0.2, < 0.7), the intervention led to a combined 0.113 SD (p=0.005) decrease in perceptions of police brutality and racism. These negative effects increase to a combined 0.262 SD (p < 0.001) among those in the highest two intervals (i.e., ≥ 0.7). For example, respondents in these intervals who received the intervention were 17.6 points (p < 0.001) less likely to believe that the police often use excessive force, 15.7 points less likely (p < 0.001) to consider the unjustified killing of suspects by police to be a serious issue, and 11 points less likely (p < 0.001) to agree that black people are regularly targeted by racist police.

Overall, these results largely validate the prediction that the intervention’s effects will vary as a function of respondents’ estimation accuracy. In general, the more respondents misestimated the various criteria in the “woke” direction, the more effective the intervention was in reducing negative or “woke” appraisals of the prevalence and severity of excessive police force and racist policing.

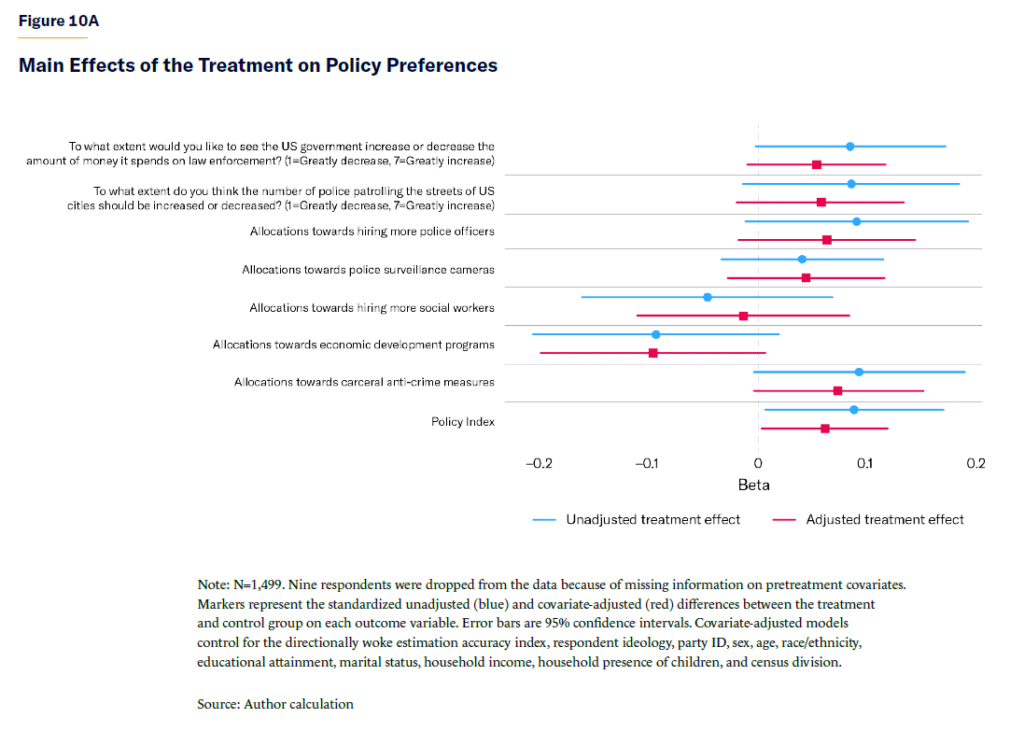

Main Treatment Effects on Policy Preferences

In the previous section, I considered how the treatment resulted in updated appraisals of police use of force. Now, I turn to the question of whether those updates translate into changes in policing-related policy preferences.

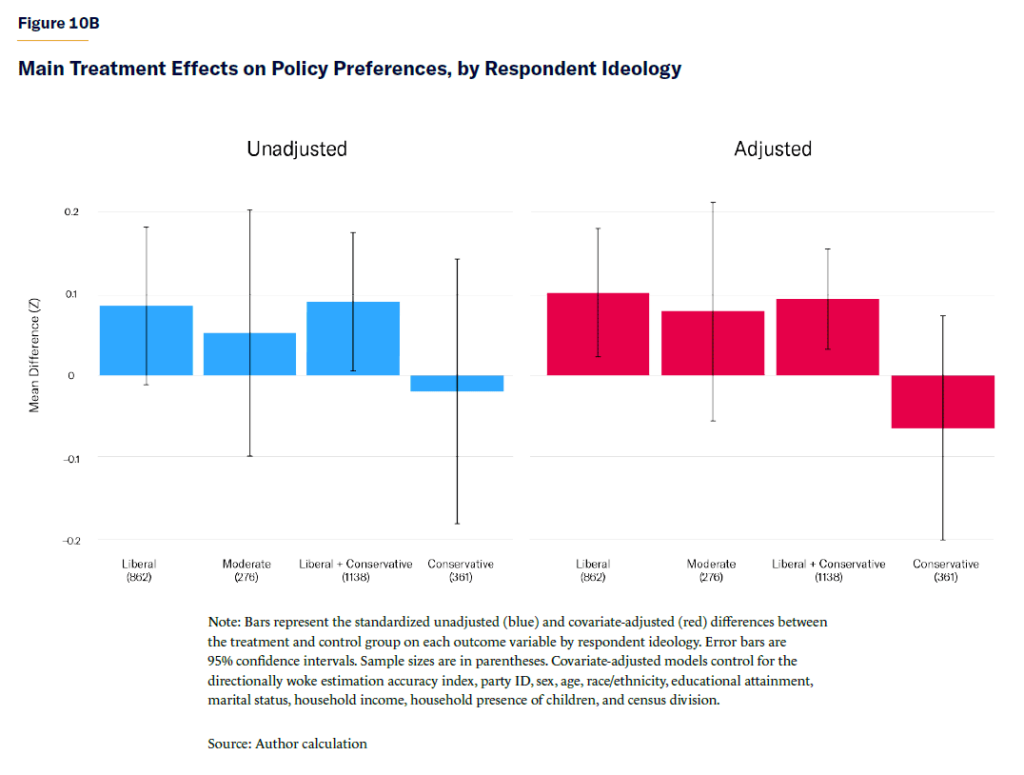

The standardized differences between the control and treatment groups on each policy measure are presented in Figure 10A. Compared with those observed for perceptions, these differences are smaller and less precise, ranging from 0.04 SD to 0.094 SD in size in the unadjusted models and from 0.014 SD to 0.096 SD in the adjusted models. While all are in the theoretically expected direction, none is significant at the p < 0.05 threshold, though several do approach it.[94]

When all items are considered in aggregate as an index, a more discernible, if still very modest, signal emerges. Compared with the control group, respondents who received the intervention scored an unadjusted 0.088 SD (p=0.037) and adjusted 0.061 SD (p=0.041) higher on the combined index, with these differences reaching significance at the 95% level. Further, and as expected, results from a mediation analysis (reported in Appendix A.8) suggest that almost all (95.6%) of these differences are attributable to the intervention’s negative effects on the perceptions index. In effect, the increases in support for policing-centered anticrime policies that are observed appear to be primarily driven by the intervention’s reduction of perceptions of police brutality and racism.

Although modest overall, the intervention’s effects are again larger[95] among liberals and moderates (and, unexpectedly, black[96] and Hispanic respondents) while smaller and statistically insignificant among conservatives. As Figure 10B (right panel) shows, liberals and moderates who received the intervention scored 0.101 SD (p=0.012) and 0.078 SD (p=0.242) higher, respectively, or 0.093 SD (p=0.003) higher when combined, on the policy index, compared with their control-group counterparts. Meanwhile, conservatives who received the intervention scored slightly more than 0.06 SD (p=0.354) lower than those who did not, though this difference is not significant.

Thus, as with perceptions, the treatment had a stronger effect on the attitudes of nonconservatives than conservatives. While some of this could, again, be attributable to a floor effect, it likely also stems from conservatives being more prone to place at the bottom of the “directionally woke” accuracy index, where the treatment effects are expected to be smaller or to move attitudes in the other[97] (i.e., liberal) direction. This expectation is formally tested again in the interaction models below.

Accuracy-Conditioned Treatment Effects on Policy Preferences

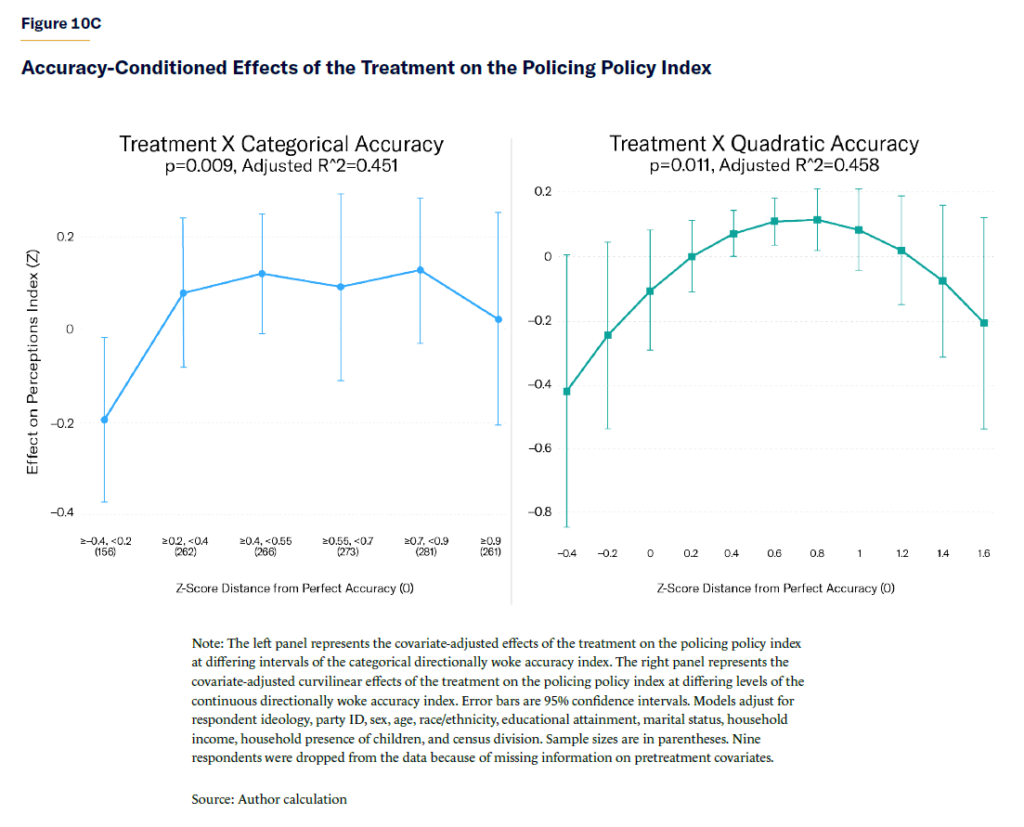

Earlier, this report showed that the intervention’s negative effects on respondents’ perceptions of police brutality and racism tended to be stronger for those with relatively larger “directionally woke” misestimates. However, the results of a treatment × categorical accuracy interaction model (left panel of Figure 10C) indicate that a more curvilinear relationship holds for the intervention’s effects on policing policy preferences.

In the bottom accuracy interval (≥ –0.4, < 0.2), respondents who received the intervention scored 0.197 SD lower (p=0.03) in support for policing-centered anticrime policies, which aligns with expectations and mirrors the pattern observed for perceptions. The effects begin to grow nonlinearly in the pro-policing direction at subsequent intervals. Across the intervals running from 0.2 to 0.9 standard deviations above perfect accuracy, which encompass approximately 72% of the data, respondents who received the intervention scored a combined 0.102 SD higher (p=0.006) on the policy index than those who did not. Moreover, this difference is driven not only by movement within response categories (e.g., “Strongly decrease” → “Moderately decrease”) but also by changes between them. For instance, respondents in these intervals who received the intervention were 5 points (p=0.026) more likely than their control-group counterparts to support increasing the number of police patrolling the streets in U.S. cities. They also allocated 4 points (p=0.004) more of their budgets toward policing-centered anticrime measures.

But rather than continuing to rise, the treatment’s effects plummet at the highest interval (≥ 0.9) such that respondents who received the intervention go from scoring 0.125 SD higher in the preceding interval (≥ 0.7, < 0.9) to just 0.02 SD higher on the policy index (though this difference is not significant, p=0.5). Given this unexpected curvilinear pattern, I opted to test whether a curvilinear model with a quadratic continuous accuracy term better fit the data. The results from this model, which are plotted in the right panel of Figure 10C, show that it is a better fit.[98]

According to this curvilinear model, respondents at the bottom of the accuracy index who received the intervention score 0.422 SD lower on the policy index than control-group peers, though this difference falls just short of conventional levels of significance (p=0.05). The difference then trends and grows in the positive or pro-policing direction, peaking at 0.8 standard deviations above perfect accuracy, at which point respondents who received the intervention score 0.109 SD (p=0.025) higher than those who did not. Thereafter, though, the effects begin to weaken and point in the antipolicing direction.[99]
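The shape described above follows from how a quadratic interaction works. Assuming the treatment is interacted with both the accuracy index A and its square, the treatment effect at a given A is b_t + b_ta·A + b_ta2·A², which peaks at A = –b_ta / (2·b_ta2) when b_ta2 is negative. The sketch below illustrates this with arbitrary placeholder coefficients; they are not the report's estimates, and the model specification itself is an assumption.

```python
def conditional_effect(a, b_t, b_ta, b_ta2):
    """Treatment effect at accuracy-index value a under a quadratic interaction."""
    return b_t + b_ta * a + b_ta2 * a ** 2


def peak_location(b_ta, b_ta2):
    """Accuracy value at which the conditional effect is largest."""
    if b_ta2 >= 0:
        raise ValueError("an interior maximum requires a negative quadratic term")
    return -b_ta / (2 * b_ta2)


# Placeholder coefficients chosen only to produce an inverted-U shape.
coefs = {"b_t": -0.2, "b_ta": 1.0, "b_ta2": -0.5}

peak = peak_location(coefs["b_ta"], coefs["b_ta2"])
for a in (0.0, 0.5, peak, 1.5, 2.0):
    print(f"A={a:.1f}: effect={conditional_effect(a, **coefs):+.3f}")
```

With these placeholders, the effect starts negative, rises to a maximum, and then declines again, which is the qualitative pattern that an interval-by-interval model can only approximate.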

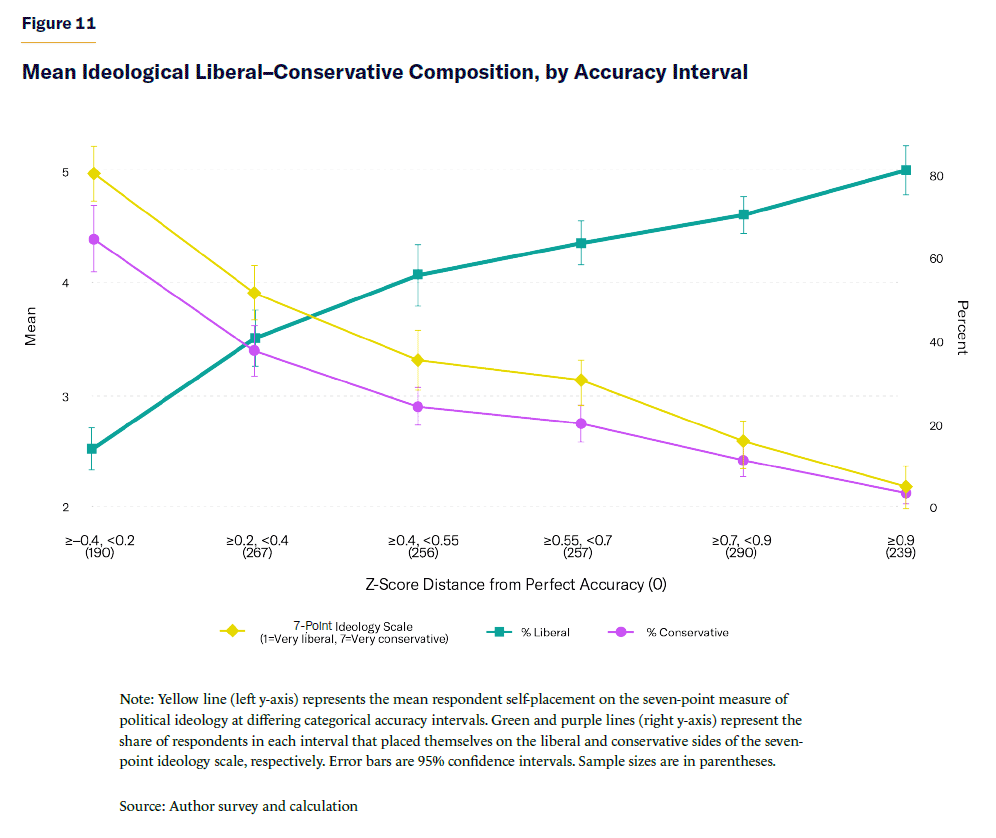

The reasons for this unexpected diminishment and directional shift of the treatment’s effects at the upper end of the accuracy distribution are worthy of further investigation. One possible explanation for this phenomenon is that this region of the accuracy index comprises the most ideologically liberal[100] subset of respondents, who may be more resistant to the treatment.

Approximately 81% of respondents in the highest (in)accuracy interval identify as “liberal” (Figure 11). Most of them identified as “very liberal” (37% of all respondents in the interval) or “liberal” (33%), for a combined 70%, as opposed to “slightly liberal” (11%). In contrast, just 3% of respondents in this interval identified as conservative. This raises the question of how stronger ideological liberalism might moderate (if not altogether neutralize) the treatment’s effects on perceptions and policy preferences. I relegate my speculative answers and suggestions for future research to the endnotes.[101]

This unresolved question about the reasons for the treatment’s curvilinear effects aside, the results from these interaction models help explain why the main effects of the treatment on policy preferences bordered on insignificance. For respondents in all but the bottom and top accuracy intervals (i.e., 72% of the sample), the treatment led to a significant, if small (0.102 SD, p=0.006), increase[102] in support for policing-centered anticrime policies. But because the treatment had an opposite or null effect for those at the lowest and highest levels of the accuracy index, the overall effect (0.061 SD, p=0.041) was weakened.

Part 4: Implications for News Coverage

Given its rarity, the phenomenon of police brutality is, and likely will always be, an abstraction in the minds of all but a tiny segment of the American public. While people can roughly gauge broader economic conditions, for instance, via the prices they pay at the pump and their sense of job security, there are far fewer direct cues for Americans to lean on when it comes to gauging the seriousness of the problem of police brutality. This means that the public is almost entirely reliant on news media and political elites for assessing its prevalence and severity. This reliance makes public opinion susceptible to manipulation.

The current study documented how appraisals of police use of force have likely already been manipulated and provided evidence for the manipulability of such appraisals in general.

In the first case, the widespread perception that black Americans, a racial group that constitutes just 12%–13% of the U.S. population, make up the majority of FOIS victims is not, or is highly unlikely to be, born out of thin air. The public does not come to that misperception unaided. Nor do they spontaneously adopt the misperceptions that: (1) roughly half of all black victims are shot unarmed; or (2) one in three blacks stopped by police (and close to one in five black Americans overall) is subjected to nonlethal physical force in a typical year. More likely, these perceptions are the consequences of a media environment that has flooded the public with more news about police brutality in the past decade than in the previous five decades combined and that largely sidelines nonblack victims of police violence.

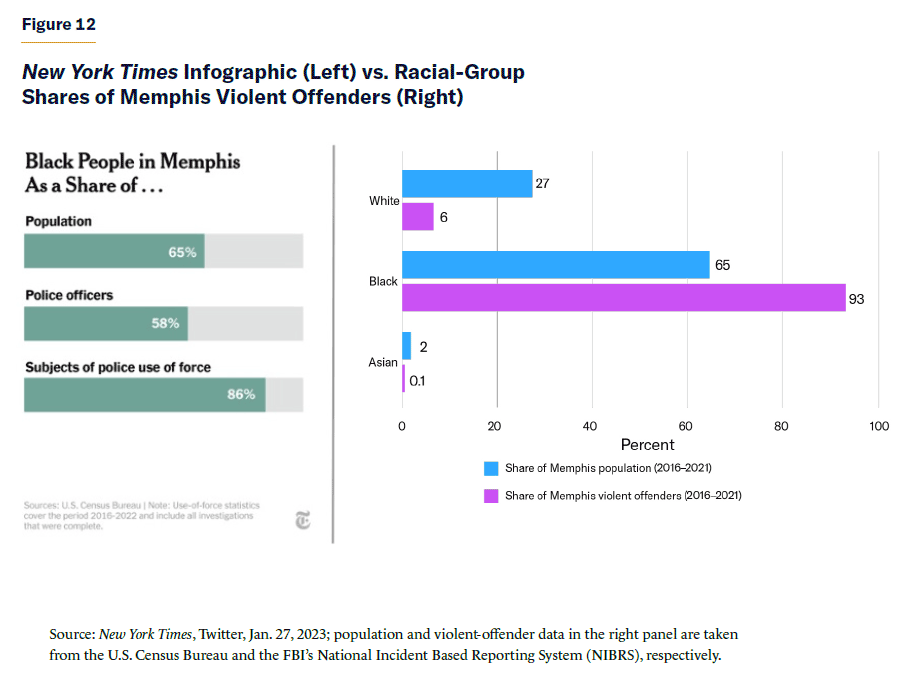

But the volume and racial skew of media coverage may not be the entire story. The findings of this study suggest that what context is given or omitted is also important for influencing perceptions. The contextual information that journalists provide is typically limited to data highlighting racial disparities in the experience of nonlethal and lethal police force. For instance, readers and viewers might be told that blacks are “three times more likely” than whites to be killed by a police officer in a given year, but they are only rarely given any data speaking to the prevalence of this phenomenon among these populations overall.[103] Nor are they provided with data that are highly relevant to understanding racial disparities in police use of force, likely because those data might reflect negatively upon black Americans.

A recent textbook example of this tendency is an infographic published by the New York Times following the January 2023 killing of Tyre Nichols (Figure 12, left panel). Not only does it not give any data on base rates, such as the percentage of Memphis blacks and whites with police contact who were subjected to police force; it excludes data that are most relevant to understanding the disparity that it highlights—namely, the even larger overrepresentation of black Memphis residents among the city’s violent criminal offenders.

Such reporting practices likely cultivate or reinforce the misperception that excessive police force against black Americans is pervasive and that black (rather than white) Americans are the most frequent victims of excessive police force. These misperceptions, in turn, can have significant psychological and social costs, particularly for socioeconomically disadvantaged groups that need police the most.

Among black Americans, for instance, these misperceptions can lead to widespread fears that they or their family members will be victimized by police—fears that are not only unwarranted, given the actual risk, but that could incur a psychological toll and discourage them from contacting police when necessary. Among the general public, they erode trust in—as well as the perceived legitimacy of—the police, which can inspire mass protests, economically costly social unrest, and greater support for de-policing policies that threaten public safety.

Importantly, the negative effects of misperceptions are likely compounded by the impact on the police themselves. Public backlash against the police can lead police officers to engage in less proactive policing, resulting in fewer stops and arrests, with some studies linking these outcomes to increases in crime. Moreover, it can weaken police morale, leading to retirements and staffing shortages, which can further exacerbate crime.

To be clear, this discussion should not be taken to mean that the pursuit of greater police accountability and reform is uncalled for or undesirable. Rather, these objectives can be pursued without distorting public perceptions of the scale and racial distribution of police use of force.

Activist groups have an interest in distorting the view that Americans might have of police brutality. Inflating public perceptions of the severity of the problem draws more attention to it. This attention can increase pressure on political leaders to act, and can encourage monetary donations from outraged members of the public. But this cynical approach can also incur substantial social costs and inspire the enactment of policing policies that are more informed by emotion than by actual data. Activist groups need to be made aware of these costs.

The findings of this study do not necessarily imply that news organizations and journalists should ignore or significantly reduce their coverage of use-of-force incidents. Instead, the implication here is that they should provide more context and information in their coverage. This will at once deracialize police use of force and put such incidents into a broader perspective. Also, given the social costs, it is incumbent on news organizations to refrain from reporting that inadvertently fuels or validates misperceptions about police violence.

Recommendations

More informative and less divisive use-of-force coverage is possible. Four recommendations to that end include:

First, journalists should contextualize their reporting of police use-of-force incidents by providing data on the rarity of such incidents. While this practice should be adopted in general, it is particularly important when referencing racial disparities in use of force. Group base rates should not be left to the audience’s imagination. Instead, journalists should clearly note them and allow their audiences to decide for themselves whether (for instance) a 0.6% occurrence rate among black Americans vs. a 0.2% occurrence rate among white Americans constitutes a “severe problem.” They could, in theory, even embed within articles base rate–related estimation questions and corresponding “misestimate” visualizations like the ones tested here. While base rates may not matter for the assessments of some (especially extremely liberal) readers/viewers, this study suggests that they are relevant to, and are likely to inform, the assessments of many others.

Second, if journalists do choose to highlight racial disparities in police use of force, they should not do so in isolation from the disparities that contribute to them, such as differing rates of criminal offending. In other words, they should not make the mistake of the NYT infographic in Figure 12. If disparities in criminal offending are not provided for context, readers/viewers will have little choice but to attribute those in use of force to racial bias, which is likely to undermine their trust in police. While racial bias may play some role in use-of-force disparities, there is no good evidence of it being the dominant factor. Journalists should thus not lead their audiences to think that it is.

Third, journalists who highlight racial disparities should not limit the focus to those between black and white Americans. For instance, use-of-force rates against Asians—which are significantly lower than those against whites—should also be reported on. While it’s unclear[104] whether their inclusion in the current study’s informational treatment made a difference, reporting rates for Asians could help combat the notion that use-of-force disparities necessarily reflect racial bias.

Fourth, news organizations and journalists should increase their coverage of use-of-force incidents involving white victims. While underrepresented relative to their population share, white Americans still constitute the majority of both nonlethal and lethal use-of-force victims. Yet they receive but a fraction of the coverage given to black victims. Though stories involving black Americans may generate more engagement from readers and viewers (if only because they fit a “racist police” narrative), the findings of this study suggest that the wildly disproportionate coverage that they receive substantially distorts public perceptions of the racial distribution of victims. Moreover, remember that the effects of the treatment were significant even for those respondents who underestimated the number of annual fatal police shootings. This suggests that public perceptions of this racial distribution may be just as influential[105] for the public’s appraisals of policing and policing policy preferences as the mere volume of victims. It is thus imperative that news organizations and journalists correct this severe racial imbalance in coverage. Doing so can counter the perception that police brutality is a phenomenon primarily suffered by black Americans; it could also help dispel perceptions and accusations of media bias among those on the right.

Police use-of-force incidents afflict Americans of all racial and ethnic backgrounds, and many of them should be treated as human tragedies. These recommendations could help bring about a healthier civic life and appropriate reform. However, I recognize that many of the incentives for news organizations to carry out these recommendations are lacking or are at odds with a bottom line that is measured more in terms of clicks and viewership than promoting accurate perceptions on social issues like police use of force.

Even if all news outlets and journalists were wholly on board with these recommendations, misperceptions of police use of force would still be promoted by political figures (including the current president),[106] activists, celebrities, and other opinion leaders.

The most realistic path to reform, then, consists of two steps:

First, news organizations and journalists should be pressured to cover policing issues in a more responsible and balanced fashion. They need to be informed of the misperceptions that the typical manner of reporting on the topic likely promotes, and of the social harms of those misperceptions. The findings of this study are a good start, but additional experimental research is needed to confirm them and bolster the case for reform.

Second, civic groups, nongovernment organizations, and even police agencies concerned with the spread of misinformation and misperceptions need to use social media fact-checking tools. The new Community Notes feature on Twitter (recently renamed “X”), for instance, allows enrolled contributors to add context and information to tweets, thus putting a check on false or misleading information and rhetoric. This could have added much-needed context to the NYT tweet in Figure 12. The same should be done for tweets by public figures, such as NBA star LeBron James’s tweet that “We’re literally hunted EVERYDAY/EVERYTIME we step foot outside the comfort of our homes!”[107] As effective use of these tools will likely require a sustained and organized effort, civic groups and police agencies should consider establishing dedicated or collaborative teams of “community noters” that scour social media for false information about the frequency and nature of use-of-force incidents and push back against it. One-off or intermittent interventions will not make a difference in the long term. Consistency and continued “re-treatment” are key.

There is no silver bullet for the misperception issues discussed here. We need to be realistic about the magnitude of change that can be achieved through perception-correcting interventions and the provision of additional context. These measures should not be regarded as substitutes for reforms that improve police accountability and transparency.

However, the current state of public perceptions of police use of force is clearly not tenable, nor is it conducive to the promotion of better public–police relations, race relations, and public safety. The findings of the current study suggest that informational and educational interventions—especially if sustained—can play a meaningful role in addressing the current public perception of police use of force and mitigating or moderating the social consequences.

About the Author

Zach Goldberg is a Paulson Policy Analyst who recently completed a PhD in political science from Georgia State University. His dissertation focused on the “Great Awokening,” closely examining the role that the media and collective moral emotions have played in recent shifts in racial liberalism among white Americans. At MI, his work deals with a range of issues, including identity politics, criminal justice, and understanding the sources of American political polarization. Some of Goldberg’s previous writing on identity politics in America can be found at Tablet and on his Substack.

In the summer of 2020, Goldberg joined MI president Reihan Salam, Columbia University professor Musa Al-Gharbi, and Birkbeck College professor and MI adjunct fellow Eric Kaufmann for a conversation on the “Great Awokening”—the strong leftward shift among white liberals on issues of racial inequality and discrimination, immigration, and diversity that has been taking place since 2014.

Appendix

Endnotes

Photo: MattGush/iStock
